Thursday, January 31, 2008

When the obvious ain't obvious, state the obvious

When people do not understand "edit", they are not invited to edit. When people do not understand "Log in / create account", what do you expect them to do? Without localisation we are lucky when people find an article and read it. That is not all we want; we want people to expand stubs, correct mistakes and get involved. For this, localisation is the most essential requirement. For every language except English, localisation is the enabling factor.

BetaWiki is the most relevant MediaWiki localisation effort. It is not just for one project, not just for the MediaWiki software, and not just for MediaWiki and the extensions used by the Wikimedia Foundation. It is for MediaWiki and all its extensions.

BetaWiki is an extension itself; this allows other people to use the software if they choose. The friendly developers of BetaWiki help people implement localisation in their software. This is essential because localisation is part of the architecture; it is not something you bolt on as an afterthought.

I consider BetaWiki a success, and I am happy that UNESCO agrees with me. The statistics show clearly how much still needs to be done. We have not even started to reach out to the readers of most of the languages we publish in. BetaWiki is a means to an end. In the end we will have people maintaining the localisation for all the languages we support.

To make it really obvious, we need you to help with the localisation of your language.

Thanks,

GerardM

Tuesday, January 29, 2008

Firefox is doing well

Firefox is getting ready for the release of the new Firefox 3.0. I have been using beta 2 for some time now, and it works really well for me.

The best part of the blog post came at the end. There they indicated that Firefox is doing so well because of the number of localisations. With 40 localisations and several more languages in beta, Mozilla is better at reaching out than Microsoft.

When this is the yardstick to measure by, MediaWiki is in a league of its own. BetaWiki supports the localisation of 259 languages. More than 90% of the messages have been localised for 48 languages, and for 85 languages more than 90% of the most relevant messages have been done.

What I find exciting is that the languages of India in particular are improving. A lot of work has already been done for Bengali, Telugu, Malayalam and Marathi. We hope this will remove one of the hurdles for people from India and Bangladesh to use Commons.

Thanks,

GerardM

Monday, January 28, 2008

What if a language just does not have the word for it?

Thanks,

GerardM

Sunday, January 27, 2008

The Maithili language and the Mithilakshar script

A request was made for a Wikipedia in Maithili; it conforms to the requirements, so that is not a problem. What IS a problem is that Mithilakshar, the script used to write the Maithili language, is not yet part of Unicode. The script has not even been recognised in ISO 15924 yet.

This is the second request for a Wikipedia where the script used to write the language presents a problem. For modern Maithili there is the option to write in the Devanagari script.

Thanks,

GerardM

Friday, January 25, 2008

Low hanging fruit

When you look at modern orchards, you see something different. The trees are no longer allowed to grow tall; all the fruit has become low-hanging fruit. Consequently, when you plan some activity and do it the classical way, you may find some low-hanging fruit. When you find a way to think outside the box and make this paradigm shift, you may have only low-hanging fruit.

Thanks,

GerardM

Thursday, January 24, 2008

Maria Catlin-a

Bèrto is Piedmontese; he loves his language and he wants his daughter to learn to speak Piedmontese. When you are the only one in a big place like Kiev, how do you do it? Bèrto is the guy behind the i-iter website, a website where people can call in and leave a recorded message that can be heard on the website. This service is very much intended to help people overcome their reluctance to speak or write in their language.

Bèrto told me that he is now going to ask people to record stories for Maria Catlin-a: bedtime stories and fairy tales that Bèrto can have his daughter listen to. Stories that will help her learn Piedmontese.

I love the idea.

Thanks,

GerardM

Wednesday, January 23, 2008

Pashto

In BetaWiki we try to offer the best possible user interface; consequently, when people say that they speak Pashto, we need to know what is meant by that. When it is indicated that they speak Pashto, meaning the official language of Afghanistan, we assume they write Southern Pashto. The feedback we got was that there are several dialects but only one form of written Pashto.

The Ethnologue information on Northern Pashto says something different: it indicates that there is a rich literary tradition in this language. Consequently, we cannot say that written Pashto is all-encompassing, and this makes the use of the ps code for Pashto problematic. On the other hand, the people who tell us that they speak Pashto do not appreciate their language being split up by people from the West.

What to do? The best we can do is ask some people who are in the know. In the meantime we are happy that the localisation for Pashto in BetaWiki has improved. But we do need to know how to deal with this.

Thanks,

GerardM

Tuesday, January 22, 2008

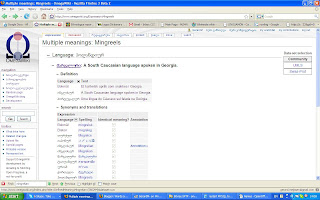

Mingrelian

Mingrelian is a language spoken in Georgia. Ethnologue indicates that some 500,000 people speak the language. We have added this language to OmegaWiki, and the cool thing is the way the language was started: with the import of some sixty language names.

As the language is enabled at the MediaWiki level, it is now also possible to present the language names in Mingrelian. The MediaWiki UI is defined so that Georgian is used as the fallback language. The OmegaWiki software uses MediaWiki 1.10alpha (r26222). What we can expect when we update MediaWiki is that the localisation will improve. Many wikis will benefit in this way.
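For readers curious how such a fallback works, here is a minimal Python sketch, assuming a simple chain in which Mingrelian (ISO 639-3 xmf) falls back to Georgian (ka) and Georgian falls back to English. The message tables, their placeholder values and the function names are hypothetical; this is not MediaWiki's own code.

```python
# Hypothetical message tables; the values are placeholders, not real translations.
MESSAGES = {
    "en": {"edit": "Edit", "login": "Log in / create account", "search": "Search"},
    "ka": {"edit": "edit [ka]", "search": "search [ka]"},
    "xmf": {"search": "search [xmf]"},
}

# Assumed fallback chain: Mingrelian -> Georgian -> English.
FALLBACK = {"xmf": "ka", "ka": "en"}


def fallback_chain(lang):
    """Return the languages to try, in order, ending with English."""
    chain = []
    while lang and lang not in chain:  # the membership test guards against loops
        chain.append(lang)
        lang = FALLBACK.get(lang)
    if "en" not in chain:
        chain.append("en")
    return chain


def get_message(key, lang):
    """Look a message up along the fallback chain; mark it if nothing is found."""
    for code in fallback_chain(lang):
        table = MESSAGES.get(code, {})
        if key in table:
            return table[key]
    return f"<{key}>"  # hypothetical marker for a message with no text anywhere


print(get_message("search", "xmf"))  # found in Mingrelian
print(get_message("edit", "xmf"))    # falls back to Georgian
print(get_message("login", "xmf"))   # falls back to English
```

The point of the chain is that a partly localised language is still usable: whatever is missing in Mingrelian is shown in Georgian, which Mingrelian speakers are likely to read, before English is used.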

BetaWiki is the MediaWiki wiki for localisation. It is also the place where really cool experiments take place. I noticed the other day that they have this cool widget where you can select your language just by starting to type its name. For me it changes the content really nicely into Dutch. Having something like this for Commons ...
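The widget's behaviour can be approximated with simple prefix matching. The sketch below is a guess at the idea, not BetaWiki's actual code: the language list, the choice to match codes as well as English and native names, and the `suggest` function are all assumptions.

```python
# Hypothetical subset of languages: code -> names a user might start typing.
LANGUAGES = {
    "nl": ["Dutch", "Nederlands"],
    "de": ["German", "Deutsch"],
    "da": ["Danish", "Dansk"],
    "mr": ["Marathi", "मराठी"],
    "xmf": ["Mingrelian"],
}


def suggest(typed, limit=5):
    """Return language codes whose code or any name starts with what was typed."""
    prefix = typed.strip().casefold()
    if not prefix:
        return []
    hits = [
        code
        for code, names in LANGUAGES.items()
        if code.startswith(prefix)
        or any(name.casefold().startswith(prefix) for name in names)
    ]
    return sorted(hits)[:limit]


print(suggest("ned"))  # a Dutch speaker can type the native name
print(suggest("d"))    # several candidates while the user is still typing
```

Matching native names matters most: a reader who cannot read the English interface can still find "Nederlands" or "मराठी".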

Thanks,

GerardM

Sunday, January 20, 2008

Noumande (not nrm)

When the Alemannic Wikipedia was created, it was fully enabled in MediaWiki. This is not the case for the Norman Wikipedia; its language file only exists in the project. In order to support this project, some programming needs to be done. The BetaWiki programmers are, however, of the opinion (an opinion that I share) that this should not be done, because the nrm code the project occupies is the code for Narom, a language spoken in Malaysia.

These two languages are treated differently. For both linguistic entities, some people chose to ignore that there was no proper code. Both currently exist as projects, but technically it is not possible to support them completely with the codes they abuse.

So how do we deal with this? For a consistent localisation of MediaWiki it is important that we use a coding system that will not bite us. When properly implemented, the ISO 639 codes will not bite us. However, it does mean that we have to fix what is broken.

On BetaWiki we have had a request for the localisation of Erzgebirgisch; no new project is asked for, only the localisation. A request for a code will be made, and we already know that it is likely to succeed. The same can be done for Norman. When Norman is considered a dialect, it is certain that we can get a code. I do not know if Norman would be considered a language, but this is something that can be discovered. With a proper code, we can have the localisation done at BetaWiki.

For Alemannic it is more complicated. It is not one language; it is several languages. What I do not know is to what extent it maps to what Ethnologue calls Alemannic ...

Thanks,

GerardM

Promoting BetaWiki

The continued need for better localisation is best illustrated by numbers. According to the SiteStatistics, the WMF supports 258 languages. The Incubator holds a long list of requested projects, many of them representing new languages waiting for a place in the sun. BetaWiki supports 257 different localisations. This is not the same as 257 languages; Chinese alone represents at least four languages.

What is also important is the quality of the localisation. Only 128 of the 257 localisations have more than 50% of the most relevant messages localised, and only 55 have more than 99%. For all MediaWiki messages, 98 localisations are above 50% and 56 are above 90%. For the extensions used by the Wikimedia Foundation, 31 are above 50% and 15 are above 90%.
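Figures like these come from dividing the number of localised messages by the number of messages in a group, and then counting the localisations above a threshold. The Python sketch below shows that arithmetic with made-up counts; the group size of 500 and the per-language numbers are illustrative only, not the real BetaWiki statistics.

```python
def completion(translated, total):
    """Percentage of a message group that has been localised."""
    return 100.0 * translated / total if total else 0.0


def count_above(counts, total, threshold):
    """Number of localisations whose completion is strictly above threshold (%)."""
    return sum(1 for n in counts.values() if completion(n, total) > threshold)


# Hypothetical counts of localised messages out of 500 "most relevant" ones.
TOTAL = 500
counts = {"nl": 500, "mr": 370, "ps": 200, "xmf": 10}

for code, n in counts.items():
    print(f"{code}: {completion(n, TOTAL):.2f}%")
print("above 50%:", count_above(counts, TOTAL, 50))
print("above 90%:", count_above(counts, TOTAL, 90))
```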

When this is news to you, it may make grim reading. It reads as if we are letting our readers and editors down. When you are looking for bright spots, there are plenty to find. We now have numbers that show our performance when it comes to localisation. We have numbers showing our progress; more languages are supported and an upward trend can be observed in the other numbers. Personally I am happy to observe that the policy of the language committee is proving its value.

What we need is more people caring for the localisation of their language. When this localisation is done in BetaWiki, not only will people benefit from an environment that is made with them in mind, they will even get pointers indicating what the messages are used for. The messages are committed daily to SVN, and this makes them go live as soon as Brion updates the live systems.

We need to promote BetaWiki, we need to spread the message. Please help us promote BetaWiki, spread the word and check out what you can do for your language.

Thanks,

GerardM

Friday, January 18, 2008

OmegaWiki in Arabic

Yesterday I posted that the system messages of OmegaWiki were imported into BetaWiki. Today I learned a new trick: how to import these messages from SVN. I am thrilled that I can show you the first results. It is wonderful that we have our content in Arabic as well.

The next thing we really want is to show the same information the way it should be shown: in a right-to-left orientation ... :)

Thanks,

GerardM

Wednesday, January 16, 2008

BetaWiki meets OmegaWiki

The messages that existed in OmegaWiki have been imported. As some OmegaWiki messages already existed in BetaWiki, it is gratifying to see that there were localisations we did not know about.

Thanks,

GerardM

Sunday, January 13, 2008

Localisation of the MediaWiki user interface

It is important for the people of existing projects that the localisation is done centrally, because this allows them to use their language in the user interface of Commons and other projects. Currently the localisation for many languages leaves something to be desired. This makes it harder for the people requesting new projects to fulfill the localisation requirement.

BetaWiki has proven itself a great facilitator for MediaWiki localisation. It has a smooth web interface, and it supports offline work through import and export of gettext (.po) files. I urge people to support their language.
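To give an idea of what working offline with .po files involves, here is a minimal Python sketch that writes English source messages and their translations in gettext PO format. Putting the MediaWiki message key in `msgctxt` is an assumption made for illustration; BetaWiki's real exporter may structure its files differently, and the messages are hypothetical.

```python
def po_escape(text):
    """Escape a string for use inside a double-quoted PO field."""
    return text.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def to_po(source, translations, lang):
    """Build a PO file: one entry per source message, keyed by msgctxt."""
    lines = [
        'msgid ""',
        'msgstr ""',
        '"Content-Type: text/plain; charset=UTF-8\\n"',
        '"Language: ' + po_escape(lang) + '\\n"',
        "",
    ]
    for key, english in source.items():
        translated = translations.get(key, "")  # empty msgstr = still to do
        lines.append('msgctxt "' + po_escape(key) + '"')
        lines.append('msgid "' + po_escape(english) + '"')
        lines.append('msgstr "' + po_escape(translated) + '"')
        lines.append("")
    return "\n".join(lines)


english = {"edit": "Edit", "login": "Log in / create account"}
dutch = {"edit": "Bewerken"}
print(to_po(english, dutch, "nl"))
```

An empty `msgstr` is how gettext tools mark a message that still needs translating, so a translator can open the file in any PO editor, fill in the gaps, and hand it back for import.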

We are in the process of providing more stimuli to the localisation effort, I hope to inform you about this in the near future.

Thanks,

GerardM

Saturday, January 12, 2008

Providing information when there is little or none

The aim of Wikipedia is to provide encyclopedic information. The aim of the Wikimedia Foundation is to provide information. Consequently the goal of the WMF is broader than the goal of Wikipedia. The implications are not often considered. Another issue is that the information is provided in a wiki. This means that information is not necessarily complete and correct, and also that information provided in one language can be, and typically is, substantially different in another.

When the aim is to provide information to the people of this world, it stands to reason that we want to provide the best information available. Sadly we are not able to provide the same quality of information to all people in all languages, because the quality will never be the same in all Wikipedias. When this is a given, the first order of business should be: how can we provide the best available information to people? When people are looking for information in a Wikipedia, they are looking for information in their language. When there is no article, they draw a blank. This is the space where a lot of improvement is possible, and it is particularly relevant to the less resourced languages.

The first thing to appreciate is that a person often knows more than one language, and this combination of languages can be anything. The challenge is to have a graceful fallback to information that is less informative or of lower quality, down to the point where we provide pointers to another source of information. The way you move from an article in one Wikipedia to one in another Wikipedia is by way of the "interwiki links". This information can be seen as lexical in nature, with a twist: disambiguation of homonyms is a requirement. The least information in an article is a stub. A stub can contain an "info box" and some lines of text. A stub can be written by hand in the wiki, or it can be put on the wiki by a bot. A text can be translated by hand or by machine. All these things bring their own issues. Key to understanding this is that it takes an article in order to have an interwiki link.

To reduce things to the least information we want to provide, you are left with the concept, a definition, and translations linking to information in other languages. These translations can be linked to Wikipedia articles in the languages of the translation. At this level we can provide information that is not language dependent; for instance, a photo of a horse is a picture of a horse in any language. When a concept is related to other concepts, we can show these relations, preferably in the language of the reader.
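The graceful fallback described above can be sketched as: walk through the languages a reader knows, in order of preference, and return the first article reachable through the interwiki links; if there is none, offer a definition of the concept. This Python sketch rests on assumptions: the data structures, the function name and the decision to prefer an article in any known language over a definition are illustrative choices, not an existing MediaWiki or OmegaWiki feature.

```python
def best_source(preferred, interwiki, definitions):
    """Pick what to show a reader who knows the languages in `preferred`.

    interwiki:   language code -> article title (hypothetical data)
    definitions: language code -> short definition of the concept
    Returns (kind, language, text) or None when nothing is available.
    """
    for lang in preferred:  # first choice: a full article
        if lang in interwiki:
            return ("article", lang, interwiki[lang])
    for lang in preferred:  # fallback: at least a definition
        if lang in definitions:
            return ("definition", lang, definitions[lang])
    return None


# A reader who knows Bengali, Hindi and English, in that order.
reader = ["bn", "hi", "en"]
print(best_source(reader, {"en": "Lithium", "cs": "Lithium"}, {}))
print(best_source(reader, {"cs": "Lithium"}, {"hi": "definition [hi]"}))
```

Swapping the two loops would instead favour a definition in the reader's first language over a full article in a second language; which order serves readers better is exactly the kind of question the fallback design has to answer.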

Encyclopedic articles come in different states of development. A well-written article on a subject in one language may be little more than a stub in another, or may not exist at all. Articles can be translated by hand or by machine, and in this way information can be provided. This gives a better start for reading and editing than a stub does.

Stubs, bot-created articles and translations are seen by some as problematic, while others see their value. They give rise to constant sniping, with new arguments or old arguments presented as new. They distract from what we are about; we are about providing the best information we can. There is no such thing as "the" Wikipedia, as there are many. Consequently the quality standards that apply to one should not be applied to another. On an intellectual level this is understood by most, but regularly people find new "problems".

One recurring theme is the number of articles; people feel offended when a project has too many bot-created or machine-translated articles. It is felt to be unfair; it denies the value of all the human effort that went into their projects. In many ways the arguments are similar to the ones about "Final version", and consequently the solution can be similar. When bot-created articles and MT articles go into a separate namespace, they are not counted as articles. Basic information is provided, and these articles can be improved with "interwiki links" that provide a route to information in other languages. When these articles are expanded or proofread by a person, they can be moved into the main namespace.

The benefit of this proposal is that we will provide more information in more languages. Most of the exclusionists' arguments get a reasonable reply, and the work people put into creating more information in their language finds a place.

Thanks,

GerardM

Friday, January 11, 2008

Site Matrix - BetaWiki extension of the week

It is a real eye-opener. In the past many wikis were created in anticipation of a future need, a need that never materialised. These projects have just been idling, waiting for someone to come along and start a project. In 2007 many projects that did not have any life in them were locked and deleted. There is a long list of discussions on Meta to close even more projects. I would argue that projects like the bm.wiktionary should be closed as well.

I find it odd that I argue for the closure of projects...

Hmmm

Gerard

Thursday, January 10, 2008

A map with a difference

This is a map of the USA. It has been uploaded to Commons and consequently it is now freely licensed. This map has created some excitement because good educational material for the deaf is in short supply. The text that you find on the map is in ASL and the characters are SignWriting.

For American Sign Language a request has been made for a Wikipedia. The language committee of the WMF has so far neither approved nor denied it. We want to approve it; it has an ISO 639-3 code (ase) and there are sufficient native speakers supporting the project... It has not been approved yet because it is technically not possible to support ASL in MediaWiki.

Putting ASL and SignWriting on the map in a Wikipedia of its own is really important. It will not only make a difference for the people who use ASL; it will also signal to people in other countries, who use other sign languages, that writing in their own language is something that can be done. This will make a real difference in the emancipation of sign languages.

It is for this reason that I am thrilled that Valerie Sutton wrote that they will be looking into the possibility of a MediaWiki extension that would make an ASL Wikipedia possible.

Thanks,

GerardM

Chemical elements - finding information on the Internet

For many of these translations a Wikipedia article exists. But how can we provide information to people who do not speak one of the languages that have a Wikipedia article? How can we find information in the language of these people? The first port of call is Google. Google does a great job; they process terabytes daily in order to give everyone the ability to find what exists on the Internet. A word like lithium is found on the Internet in at least five languages, and when you are looking for information in Czech, it is likely to be drowned out by information in English. A sophisticated user knows about Google's advanced search. However, many languages are not on its list... languages like Bengali, Hindi or Swahili...

Google cannot find it yet. One of the reasons it cannot is that documents on the Internet do not state what language they are written in. You need smart routines that distinguish one language from the next. It would be much easier if this information were entered with the document at the source. Sadly, not all web tools are able to do this properly.
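Declaring the language at the source is simple in HTML, which has a `lang` attribute for exactly this purpose. The Python sketch below, using only the standard library's `html.parser`, reads the declaration from the `<html>` element and returns `None` when a page does not declare one; that is the case where a search engine has to guess. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class LangDeclarationFinder(HTMLParser):
    """Records the first lang attribute found on an <html> element."""

    def __init__(self):
        super().__init__()
        self.lang = None

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        if tag == "html" and self.lang is None:
            for name, value in attrs:
                if name == "lang" and value:
                    self.lang = value.strip()


def declared_language(html_text):
    """Return the declared document language, or None if it is undeclared."""
    finder = LangDeclarationFinder()
    finder.feed(html_text)
    finder.close()
    return finder.lang


print(declared_language('<!DOCTYPE html><html lang="cs"><body>lithium</body></html>'))
print(declared_language("<html><body>lithium</body></html>"))
```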

Wikia has entered the search business. They want to be open and transparent, and, as I learned from within Wikia, they want to be multilingual in a few months. I will be SO happy if they allow people to tag information on the Internet that is important to them as being in a specific language, because this will allow us to point to documents that exist on the Internet.

Thanks,

GerardM

Wednesday, January 09, 2008

Just another great day

Thanks,

GerardM

Tuesday, January 08, 2008

CentralAuth and FlaggedRevs

There may be all kinds of reasons why they have not gone live yet; I am happy to say that localisation is not one of them, as the localisation has been done for many languages. There are few practical things we can do to make the polite point that we would really, really, really like to have these extensions go live. Localisation is such a polite way.

I invite you all to check the statistics, and if your language is not there yet, go to BetaWiki and help us localise the 59 messages for CentralAuth and the 122 messages for FlaggedRevs.

Please Brion, consider this as well in your planning ...

Thanks,

GerardM

Thursday, January 03, 2008

MediaWiki localisation of Marathi

73.99% of the most important MediaWiki messages for Marathi are now localised. As not all of the most important MediaWiki messages are localised, MediaWiki is not really usable in Marathi out of the box. One of the ways the localisation can be improved is by importing messages from projects. SPQRobin imported some 500 more messages from the mr.wikipedia, and after running several sanitizing scripts, they were committed.
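We do not know what SPQRobin's sanitizing scripts actually check. As one hypothetical example of such a check, the Python sketch below flags imported messages that use an unknown message key, are empty, or have lost a `$1`-style parameter present in the English original, since a missing parameter would break the message when it is displayed. The function names and sample messages are illustrative.

```python
import re

PARAMETER = re.compile(r"\$\d+")  # MediaWiki-style parameters: $1, $2, ...


def parameters(text):
    return set(PARAMETER.findall(text))


def check_import(source, imported):
    """Return (key, problem) pairs for imported messages that look unsafe."""
    problems = []
    for key, translated in imported.items():
        if key not in source:
            problems.append((key, "unknown message key"))
            continue
        if not translated.strip():
            problems.append((key, "empty message"))
            continue
        missing = parameters(source[key]) - parameters(translated)
        if missing:
            problems.append((key, "missing " + ", ".join(sorted(missing))))
    return problems


english = {"welcome": "Welcome, $1!", "edit": "Edit"}
imported = {"welcome": "welcome [mr]", "edit": "edit [mr]", "bogus": "x"}
for key, problem in check_import(english, imported):
    print(key, "->", problem)
```

A check like this only catches mechanical breakage; whether the Marathi wording is right still needs a human proofreader.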

SPQRobin does not speak Marathi, but it is probable that he did a great job. It would be good if someone had a look at the Marathi messages, hopefully not only to check the existing messages but also to work on the messages that still need to be done.

Importing from a project is one of the obvious methods of enriching the MediaWiki localisation. Proofreading the localisation is important and will improve the quality of the MediaWiki experience. Hopefully someone will come along and do this for Marathi.

The BetaWiki developers are actively looking at improving the MediaWiki localisation, and MediaWiki already allows five separate plural forms; it may well be that languages like Marathi or Hindi have needs of their own. :)
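To illustrate why plural forms are language-specific, here is a minimal Python sketch of choosing a form in the spirit of MediaWiki's {{PLURAL:...}} syntax. The two rules (English with two forms, Russian with three) are examples only, integer counts are assumed, and reusing the last supplied form when a translation gives fewer forms than the rule asks for is a design choice of this sketch. Rules for Marathi or Hindi are not shown.

```python
def plural_index_en(n):
    """English: one form for 1, another for everything else."""
    return 0 if n == 1 else 1


def plural_index_ru(n):
    """Russian (integers): 1, 21, 31... but not 11; 2-4, 22-24... but not 12-14; all others."""
    if n % 10 == 1 and n % 100 != 11:
        return 0
    if 2 <= n % 10 <= 4 and not 12 <= n % 100 <= 14:
        return 1
    return 2


RULES = {"en": plural_index_en, "ru": plural_index_ru}


def plural(lang, n, forms):
    """Pick the right form for n; reuse the last form if too few are given."""
    if not forms:
        raise ValueError("at least one form is required")
    index = RULES.get(lang, plural_index_en)(n)  # assumption: default to English
    return forms[min(index, len(forms) - 1)]


pages_ru = ["страница", "страницы", "страниц"]
for n in (1, 3, 5, 11, 21, 22):
    print(n, plural("ru", n, pages_ru))
```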

Thanks,

GerardM