The #Localisation team consists of people who are used to each other. We have worked together for a long time, and now it is a job. With a job come dreary things, like time management in order to be paid, but it also frees up time to spend on the real issues that are so dear to our hearts.

With more time, and with being part of the team of MediaWiki developers, all kinds of things change and need to be addressed. As an amateur (i.e. an unpaid professional) you choose what you want to be involved in. Siebrand did the project management while also being the working "first among equals"; in reality he has been herding cats.

The carrot and stick approach worked well: if you code this, it will go live soon; if you localise these messages first, your Wikipedia will be more usable. Making sure that the environment was optimal for getting the jobs done has always been part of project management.

Now that we are a team intending to work with industry best practices, it is wonderful that the Wikimedia Foundation knows about best practices and intends to implement them in the various disciplines in the organisation. For software there is agile, and we have the good fortune of two days of training by Hai from ThoughtWorks.

The good news is that as a result of implementing an agile management style, we will have frequent updates of language-related software. We will communicate a lot about functionality and requirements (in both directions), particularly with the language support teams we are setting up, and we expect that as a result many great languages will get all the readers and editors they deserve.

Thanks,

GerardM

Friday, September 30, 2011

Thursday, September 29, 2011

#Wikimedia #mobile #statistics

The MobileFrontend has replaced the old Ruby software and it is doing well. The amount of traffic it handles continues to grow and the software is coping well. Development concentrated on replacing the existing functionality; now that it is freed of these shackles, new opportunities arise.

The MobileFrontend has been available for testing at the bottom of all wiki pages for a considerable time, but its use was not included in the mobile statistics. This is sad, not only because we did not learn how much it was used during the test period, but also because no data was recorded once it went into real use.

When the data is obviously, utterly wrong, it is good practice to approximate what the data would have been. While such estimates are not absolutely right, they are workable, and they provide information management can rely on.
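One simple way to approximate a gap is to interpolate linearly between the days for which counts do exist. The sketch below assumes daily totals stored in a list, with None for days that were not recorded; the function name and data layout are illustrative, and this is not necessarily how the Wikimedia statistics were corrected.

```python
from typing import List, Optional


def fill_gaps(counts: List[Optional[int]]) -> List[float]:
    """Estimate missing daily counts (None) by linear interpolation.

    Gaps at the start or end are filled with the nearest known value,
    because there is nothing on the other side to interpolate towards.
    """
    known = [i for i, value in enumerate(counts) if value is not None]
    if not known:
        raise ValueError("at least one day must have a recorded count")

    filled: List[float] = []
    for i, value in enumerate(counts):
        if value is not None:
            filled.append(float(value))
            continue
        prev = max((k for k in known if k < i), default=None)
        nxt = min((k for k in known if k > i), default=None)
        if prev is None:
            filled.append(float(counts[nxt]))
        elif nxt is None:
            filled.append(float(counts[prev]))
        else:
            # Move along the straight line from counts[prev] to counts[nxt].
            step = (counts[nxt] - counts[prev]) * (i - prev) / (nxt - prev)
            filled.append(counts[prev] + step)
    return filled


# Hypothetical daily page views with two unrecorded days.
print(fill_gaps([100, None, None, 160]))
```

Linear interpolation ignores weekly patterns and growth trends; for traffic that grows as fast as mobile traffic, scaling from a comparable measured series may give better estimates.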

As it is, there may be other data that is still not measured: there are mobile apps that use the API to request data. And do not forget that MobileFrontend now supports Wiktionary, Wikibooks, Wikisource and all the other projects as well...

Thanks,

GerardM

Happy Rosh Hashanah and happy Dussehra

Today at dusk it will be Rosh Hashanah (ראש השנה). My colleague Amir is looking for pomegranates, a traditional food at this time of year.

My colleague Alolita told me that the ten-day Dussehra (ಮೈಸೂರು ದಸರ) festivities also started today.

As this is the day when the Localisation team is together for the first time, I am happy and I wish you all a joyous day wherever you are, whatever you do.

Thanks,

GerardM

Wednesday, September 28, 2011

Presenting Sue's barnstar

Sue, the director of the #Wikimedia Foundation, presents her own barnstar to deserving Wikimedians. Guess what: she speaks English, and she wants to present her barnstar to people of all projects and all languages who deserve recognition.

As I am in the Office for a week, I have been given the honour of presenting "the program" to you and explaining what else Sue gets out of it.

In doing so, Sue tests the software, and it works best for her when it is fully localised. She is looking for people she does not know personally, and therefore she relies on people from the community to make suggestions to her. Sue likes it best when a story is attached to the suggestion.

Thanks,

GerardM

Old documents presented well

A #German #MediaWiki wiki that specialises in old documents has beaten the Wikimedia Foundation to the punch and implemented the WebFonts extension.

Santhosh helped with some of the finer details of implementing the Fraktur font, and as you can see, using WebFonts for source material is quite powerful.

It also allows us to transcribe the Dead Sea Scrolls and present the text in a font that is similar to the written original. Once the text is transcribed, it becomes easy for linguists and theologians to compare the old with the new.

Transcribing is very much an activity that anyone can do. Supporting a text with appropriate fonts is something in which we will be able to excel.
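Web fonts work because the browser downloads the font along with the page, as described by a CSS @font-face rule. As an illustration only, the sketch below generates such a rule plus a selector that applies it. The family name, file paths and CSS class are hypothetical, and this is not the WebFonts extension's own code.

```python
from typing import List, Tuple


def font_face_css(family: str, sources: List[Tuple[str, str]], selector: str) -> str:
    """Return an @font-face rule for `family` and a rule applying it to `selector`.

    `sources` is a list of (url, format) pairs; the browser tries them in order.
    The generic `serif` family is the fallback while the font is loading or
    if it fails to load.
    """
    src = ",\n       ".join(f"url('{url}') format('{fmt}')" for url, fmt in sources)
    return (
        "@font-face {\n"
        f"  font-family: '{family}';\n"
        f"  src: {src};\n"
        "}\n"
        f"{selector} {{ font-family: '{family}', serif; }}\n"
    )


# Hypothetical Fraktur font served next to the wiki.
print(font_face_css("ExampleFraktur",
                    [("fonts/ExampleFraktur.woff", "woff"),
                     ("fonts/ExampleFraktur.ttf", "truetype")],
                    ".fraktur"))
```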

Thanks,

GerardM

Monday, September 26, 2011

Being a newbie ... it sucks

I have to post messages on various #Wikipedias. I am doing this with my Wikimedia Foundation account and I am not happy: I am treated as if I am stupid. I cannot add new topics to discussion pages; I cannot even edit the discussion pages I need.

There is a very easy solution for me: I can ask for global sysop rights because I am "staff". However, that is cheating. It is more fun to start yelling, because the restrictions on newbies are so ... off-putting.

What is the point of not allowing newbies to add new topics ... It is stupid!

What is the point of not allowing newbies to edit a discussion page ... It is stupid!

Guess what I would be doing if I had not made a career out of it? I would walk away and not return.

Thanks,

GerardM

September 26

In Finland, today is the official name day for Kuisma, Finn, Gáivvaš and Johannes, Juhani, Juho. Finland is not the only country with name days. The fun thing is that people can say that it is "Kuisma day" and expect you to know that it is September 26. Well, that is to say, when you are Finnish.

When your language is different, the name days are different, or they may not exist at all. So what do you do? How do you deal with name days when you translate a text that includes them? Or do you simply leave them as they are? Should name days be considered an open standard because they contain public facts, or can they be proprietary?

If name days are to be part of a standard, it would make sense for them to be part of a standard like the CLDR. On the other hand, so much data is still missing. Does it make sense to add even more to the CLDR?
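To make the translation problem concrete: name days are locale data, a lookup from a country's calendar to dates, and a name that appears in one calendar is simply absent from another. The sketch below uses a hypothetical table keyed by country code. It is not taken from CLDR, and it contains only the Finnish entry from this post.

```python
from datetime import date
from typing import Dict, List, Optional, Tuple

# Hypothetical name-day table: country code -> (month, day) -> names.
# Only the entry mentioned in this post is filled in.
NAME_DAYS: Dict[str, Dict[Tuple[int, int], List[str]]] = {
    "FI": {(9, 26): ["Kuisma", "Finn", "Gáivvaš", "Johannes", "Juhani", "Juho"]},
}


def name_day(country: str, name: str, year: int) -> Optional[date]:
    """Return the date of `name`'s name day in `year`, or None when the
    country has no name-day calendar or the name is not in it."""
    for (month, day), names in NAME_DAYS.get(country, {}).items():
        if name in names:
            return date(year, month, day)
    return None


print(name_day("FI", "Kuisma", 2011))  # "Kuisma day" for a Finnish reader
print(name_day("NL", "Kuisma", 2011))  # no equivalent for a Dutch reader
```

A translator who meets "Kuisma day" could resolve it to a plain date this way, but only if the source locale's calendar is available as data.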

Thanks,

GerardM

Going to San Francisco

The #Wikimedia Localisation team will be going. I doubt it is wise for us to wear flowers in our hair as the song suggests.

Localisation and internationalisation are very much part of any and all software development. For MediaWiki this is an established principle: updates to the software go to translatewiki.net on a daily basis, and the text of messages is often improved to allow for localisation.
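A common way to improve message texts for localisation is to replace text glued together in code with whole sentences that contain placeholders, so that each language can put the pieces in its own order. MediaWiki messages use $1, $2 and so on for this. The sketch below only mimics that substitution step. It is not MediaWiki's message API, and the Dutch string is an illustrative translation.

```python
import re


def format_message(template: str, *params: str) -> str:
    """Substitute MediaWiki-style $1, $2, ... placeholders with `params`.

    A placeholder without a matching parameter is left untouched.
    """
    def replace(match: re.Match) -> str:
        index = int(match.group(1)) - 1
        return params[index] if 0 <= index < len(params) else match.group(0)

    return re.sub(r"\$(\d+)", replace, template)


# The translator, not the programmer, decides the word order.
english = "$1 edited $2"
dutch = "$2 is bewerkt door $1"  # illustrative translation
print(format_message(english, "Amir", "Main Page"))
print(format_message(dutch, "Amir", "Main Page"))
```

Had the code built the English sentence by concatenating "Amir" + " edited " + page, no translation could move the page title to the front.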

Staying at the Wikimedia Office will allow us to improve our contacts and consequently our effectiveness. Yes, we will have "some" fun, but without it this would just be a job, and we are passionate about our mission.

One thing that is REALLY exciting is that we will discuss how and when to bring the WebFonts extension into production. For me this is like a dream come true.

Thanks,

GerardM

The #Wikimedia deployment window

There are several teams of #MediaWiki developers. The work done by a team is often related and consequently it makes sense to deploy new and amended software together.

Deploying code is an art in its own right. At the Wikimedia Foundation it is practised by people with advanced skills in software review. Software that works in a test environment hits high traffic once it goes live on Wikipedia. This can break code, and the people who deploy are all too aware of this little gotcha.

The Localisation team has its own deployment window, and the team is informed about what is planned for release. They can comment on the list, add to it and even remove items from it. For your amusement, this is this week's list:

We have planned for the following code to be deployed. I suggest we only put it in 1.18wmf1, and do not backport it to 1.17wmf1 to reduce complexity.

http://www.mediawiki.org/wiki/Special:Code/MediaWiki/tag/i18ndeploy

Contains revs (all reviewed - tag can be removed when backported/deployed):

97606 - Narayam bug fix in keybuffer

97609 - Narayam Nepali update

97644 - L10n fix for upload wizard

97793, 97804 - Number grouping updates for many Indic languages

97962, 98006 - More flexible Language::formatTimePeriod()

97982 - WebFonts for Sanskrit (WebFonts is not yet deployed on Wikimedia; we need to plan for that. How? - backport to 1.18 in any case)

98023 - Babel i18n bug fix

Thanks,

GerardM
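Revisions 97793 and 97804 concern number grouping for Indic languages. The list does not say what changed, but the convention involved is well known: the last three digits form one group, and the digits before them are grouped in pairs (lakh and crore). Here is a minimal sketch of that rule, written independently of MediaWiki's Language class.

```python
def group_indic(n: int, sep: str = ",") -> str:
    """Group the digits of an integer the Indian way: 1,23,45,678.

    The last three digits form one group; every group to the left of
    that has two digits.
    """
    sign = "-" if n < 0 else ""
    digits = str(abs(n))
    if len(digits) <= 3:
        return sign + digits
    head, tail = digits[:-3], digits[-3:]
    groups = []
    while len(head) > 2:
        groups.insert(0, head[-2:])  # peel off pairs from the right
        head = head[:-2]
    if head:
        groups.insert(0, head)
    return sign + sep.join(groups + [tail])


print(group_indic(12345678))  # compare with the Western 12,345,678
```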

Sunday, September 25, 2011

Babel #localisation is now a priority

All #Wikimedia wikis have the Babel extension enabled. Slowly but surely people are starting to use it, and it already supports many, many languages.

A Lezghian Wikipedia is being developed in the Incubator. This means there are Wikimedians who know Lezghian, and it makes sense for them to be able to find each other.

Once this extension is localised at translatewiki.net, the Lezghian portal and the Babel information on user pages will look great. Once the localisations are enabled for Lezghian on the WMF wikis, you can indicate your fluency in this language and in other languages as well. When the localisations are a bit off, you can update them at translatewiki.net, and the next day you may notice the difference.
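On a user page, fluency is indicated with a call such as {{#babel:lez-N|ru-4|en-2}}: language codes, each optionally followed by a level. The sketch below splits such a call into (code, level) pairs. The level set (0 to 5, plus N for native) and the "no level means native" rule reflect our understanding of Babel's convention. This is a simplified illustration, not the extension's parser.

```python
from typing import List, Tuple

# Assumed Babel levels: 0-5, plus N for native speakers.
LEVELS = {"0", "1", "2", "3", "4", "5", "N"}


def parse_babel(call: str) -> List[Tuple[str, str]]:
    """Split a {{#babel:...}} call into (language code, level) pairs.

    A code without a recognised level suffix is treated as native ("N"),
    so codes that contain hyphens themselves (e.g. zh-hans) survive intact.
    """
    body = call.strip()
    if body.startswith("{{#babel:") and body.endswith("}}"):
        body = body[len("{{#babel:"):-2]
    pairs: List[Tuple[str, str]] = []
    for item in body.split("|"):
        item = item.strip()
        if not item:
            continue
        code, sep, level = item.rpartition("-")
        if sep and level in LEVELS:
            pairs.append((code, level))
        else:
            pairs.append((item, "N"))
    return pairs


print(parse_babel("{{#babel:lez-N|ru-4|en-2}}"))
```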

Thanks,

GerardM

#MediaWiki #RTL support when upgrading to 1.18

Amir is a Wikimedian and a linguist who quickly gained expertise in supporting RTL languages like Arabic and Hebrew. Not only did he file many bugs in Bugzilla, he provided us with a lot of fixes as well. His latest project is supporting RTL wikis through the upgrade to release 1.18, which contains RTL fixes by him and by SPQRobin.

Enjoy,

GerardM

Administrators can thoroughly customize every MediaWiki-based wiki by editing CSS and JS files, such as MediaWiki:Common.css, MediaWiki:Common.js and MediaWiki:Vector.css. These customizations are usually requested by the editing community and fall into two general categories. The first category is changes to the design of the site, such as a different background image, a festive logo, a different font or a different color for the revision comparison (diff). The other category works around bugs until they are solved in the core code.

Customizations may need to be updated by the local site admins, especially after the deployment of a new version of MediaWiki or of a customized extension. Sometimes old customizations that worked around bugs just quietly stop working after the bug is resolved. They don't affect the site much, but they fill up Common.css with now-useless code that makes maintenance much harder, so it is best to remove them. Sometimes changes in the software break the customizations and the site appears broken to users; in this case they MUST be removed or updated.

MediaWiki 1.18 was rolled out to several wikis a few days ago, among them mediawiki.org, the website about the software itself. MediaWiki 1.18 has many updates for handling right-to-left (RTL) text, especially on pages where it is mixed with left-to-right text. Before the upgrade, mediawiki.org had several customizations to fix the display of RTL text in the tabs at the top of the page. After the upgrade, this text was displayed badly until those customizations were removed.

All the other Wikimedia wikis will be upgraded next. If you are an administrator on any Wikimedia wiki, you should look at these customization files as soon as possible, as well as at the release notes of MediaWiki 1.18. Understand the purpose of the existing customizations and be prepared to remove the ones that are no longer needed immediately after the upgrade. A good rule of thumb is to take an especially close look at lines that contain "rtl", "ltr", "right", "left" or "bidi".
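The rule of thumb can be applied mechanically as a first pass. The sketch below lists every line of a stylesheet that contains one of those words. You can obtain the text by copying it from the page or through the page's action=raw URL. The script is only an aid and does not replace understanding each rule. Because it matches substrings, it also flags properties such as margin-left, which is intended here.

```python
import re
from typing import List, Tuple

# Words from the rule of thumb; matched case-insensitively, anywhere in a line.
SUSPICIOUS = re.compile(r"rtl|ltr|right|left|bidi", re.IGNORECASE)


def lines_to_review(css: str) -> List[Tuple[int, str]]:
    """Return (line number, line) for each line that mentions a suspicious word."""
    return [
        (number, line.strip())
        for number, line in enumerate(css.splitlines(), start=1)
        if SUSPICIOUS.search(line)
    ]


# A made-up fragment of a Common.css page.
sample = """body { color: #222; }
.rtl #p-cactions li { float: right; }
#content { margin-left: 10em; }
h1 { font-weight: bold; }"""

for number, line in lines_to_review(sample):
    print(f"{number}: {line}")
```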

If you need more help understanding these files, contact Amir Aharoni, preferably on IRC.

-- Amir

Saturday, September 24, 2011

Q&A with the #MediaWiki #bugmeister

As more developers work on the MediaWiki software, keeping a grip on the outstanding issues becomes more than a day job. Mark Hershberger's task is to manage the needs of the community, and he does this in a hands-on fashion by bringing focus to the bugs reported in Bugzilla.

He has been doing it for a while now and this interview may give you an idea why having a bugmeister is a great idea.

Enjoy,

GerardM

Can you explain what a bugmeister does?

The main idea of a bugmeister is clear from the posted job opening. Basically, it says that the bugmeister brings the community's issues to the attention of WMF Project Managers and Developers. In addition to making sure the community's needs are heard, I've also worked recently to get developers from the community involved in WMF activities. For example, I'll be hosting a fund-raising-focused triage in a few weeks where community input is wanted.

There seems to be more work than one bugmeister can do. Is it true that, by focusing on what needs the most tender loving care, you pick the low-hanging fruit?

There are a lot of bugs in Bugzilla (over 6000 open issues right now) so I admit that bug-solving is driven somewhat by the squeaky wheel since those are the issues the community has shown the most interest in. I do want to chew through those other bugs but I think that focusing on the new bugs and most-often referenced old bugs will get a lot of these bugs handled.

WMF is very much into agile development. Is a bug triage something that you do at the beginning or at the end of a sprint?

Good question. I'm not sure I have an answer, but let me try.

Part of the purpose of triage -- at least how we're using it at the WMF -- is to understand problem reports and how to solve them and, after that, to assign them to a team or a person to work on.

So, as far as I understand sprints, triage would happen at the beginning.

Identifying and assessing the new bugs is what I understand you do. How does this fit into the process of fixing bugs?

This varies with the priority the bug gets. For a quick overview of the timeline of my involvement, see the documentation on MediaWiki.org.

For example, bugs with "highest" priority get very close attention, "high" priority bugs somewhat less, and so on.

Every week more new bugs are reported than are marked as fixed. How significant is this?

First, the weekly bug report isn't completely accurate -- it has some bugs. For example, I noticed recently that it had reported 594 bugs created in one week when a query shows only 122 were created that week.

Second, it is normal for projects with open bug reporting tools to have more bugs created in a given period than closed. I'm having trouble finding the report at the moment, but I recall seeing that Mozilla Firefox had more bugs opened in a year than closed.
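Weekly figures like these are easy to cross-check with a direct count. The sketch below counts bugs opened and resolved in one week from a list of records. The Bug class and its fields are hypothetical stand-ins for whatever a Bugzilla query or export returns, and the dates in the example are made up. The week is a half-open interval, so a bug filed exactly at the boundary is counted in only one week.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, Optional, Tuple


@dataclass
class Bug:
    """Hypothetical bug record; real data would come from a Bugzilla query."""
    bug_id: int
    created: datetime
    resolved: Optional[datetime] = None  # None while the bug is still open


def weekly_counts(bugs: Iterable[Bug], week_start: datetime) -> Tuple[int, int]:
    """Count bugs opened and resolved in the half-open week [start, start + 7 days)."""
    week_end = week_start + timedelta(days=7)
    opened = resolved = 0
    for bug in bugs:
        if week_start <= bug.created < week_end:
            opened += 1
        if bug.resolved is not None and week_start <= bug.resolved < week_end:
            resolved += 1
    return opened, resolved


bugs = [
    Bug(1, datetime(2011, 9, 12, 10)),
    Bug(2, datetime(2011, 9, 14), datetime(2011, 9, 16)),
    Bug(3, datetime(2011, 9, 1), datetime(2011, 9, 13)),
    Bug(4, datetime(2011, 9, 19)),  # exactly at the end of the week: next week
]
opened, resolved = weekly_counts(bugs, datetime(2011, 9, 12))
print(f"opened {opened}, resolved {resolved}, net {opened - resolved:+d}")
```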

There is a perceived lack of people working on code review. Can you comment?

Over the past year Rob Lanphier has worked to get more people reviewing code since, yes, we had too few people doing code review. We're in a much better position now, but we still do not have the resources to review every bit of code that is in Subversion. I'd rather make the code repository and code distribution available to anyone who wants to extend MediaWiki, since this is better than telling people "We can't review your code, so it isn't welcome!"

As an example of how code review has gotten better, Rob Lanphier and I have been doing a lot of work on getting code reviewed faster and code marked FIXME fixed faster as we ramp up to a 1.18 deployment. This is a big pain point since we want to make more frequent releases of MediaWiki and become, as you pointed out, more agile. And we don't want to release un-reviewed code.

I think, though, that if you look at the way FIXMEs have been resolved, or how new revisions have been reviewed, that you'll see some very good trends. In the past few weeks we've really been driving unreviewed code down pretty hard. I'm confident that we can continue to keep unreviewed code low.

Code not written by WMF employees is certainly reviewed -- especially if it is going to be deployed on the site or released as part of MediaWiki. Since we only have limited resources, we cannot review all code in our Subversion repository.

For example, we host Semantic MediaWiki and extensions, but this code is not used on any Wikimedia website and is not distributed as part of MediaWiki. So, to make sure that the code we do release is reviewed, we have to ignore Semantic MediaWiki for the most part.

An example of MediaWiki code that is not used on the cluster but is reviewed is the new installer for MediaWiki, or code that supports databases other than MySQL (such as PostgreSQL). But when it comes to database code, our ability is somewhat limited since we're so focused on MySQL, so we are looking to recruit volunteer reviewers who use and are familiar with the other databases MediaWiki can use to help review this code.

Siebrand indicated that the presence of people from many disciplines was why the recent i18n bug triage was so successful. How does this mix with the small teams typical of agile?

Triages are more successful when more people are involved, true. Since a basic part of the Bug Triage, at least in the way I've been running them, is open community involvement -- I hold the triages on IRC and everyone is invited -- I don't think we're limited by small teams.

How do you measure your success: is it the volume of work done on bugs, a change in the way the developer community works, or a mix of things?

I think it is still too early to answer that question definitively. I'm actually working with a researcher to find out what sort of metrics we can find in the bug database to draw good conclusions about community involvement, # of bugs solved, and the like.

The role of bugmeister seems far removed from the community editing the projects. Do you keep informed about what happens in the projects, and if so, how?

I work with different community members to enable editing on the projects. Extending the functionality of a project, as we did with the Google News Site Maps (GNSM) extension on Wikinews, is a big part of that.

This coming Wednesday, I am having a Wikibooks-focused triage with some people from that community whom I met in the Collection extension triage. During this time we'll be able to find out how we can help their project succeed.

What is your favourite MediaWiki project, and what is the most challenging project?

I admit a soft spot for Wikisource, but this is mostly because I think we could use it to provide a community-driven replacement for reCAPTCHA. Google is using reCAPTCHA to help the New York Times scan in old newspapers, but, as far as I can tell, they aren't making the result publicly available. I think we can do better.

Thanks,

Mark.

Thursday, September 22, 2011

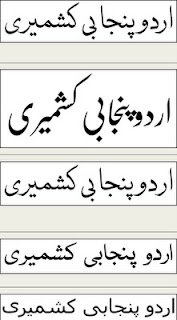

The difference #Urdu makes

When you learn to write, it matters where you learn this skill. You may learn to write in a different script, and the characters may look different from what is considered to be the standard. The examples to the right show fonts that are usable for the Urdu language. Some reflect how Urdu is written by hand, for instance in the Nastaleeq style.

Of the examples to the right, the one at the bottom comes with some versions of Microsoft Windows; the others were developed with Urdu in mind by the Center for Research in Urdu Language Processing. As Urdu uses its own set of characters of the Arabic script, it is no wonder that Urdu has its own keyboard mapping as well. This is available from the same organisation.

Urdu is a good example of how, when you support languages, you cannot take too much for granted. It also shows that as we develop software intended to support languages, we will need people who are knowledgeable about their language: people who are willing to test the software we develop for all languages.

These same people may help us provide, amend and verify the information that exists in standards like the CLDR for their language. We need to build teams of people willing to support their language. This will not only help us get the best out of MediaWiki, it can have a much bigger impact when we do this well.

Thanks,

GerardM

#Wikia, developing #MediaWiki is a community effort

Many organisations rely on MediaWiki and even though the Wikimedia Foundation provides a great product, it does not always provide exactly what is needed. Wikia is one organisation that has a track record of adding its own functionality on top of MediaWiki.

Wikia introduced functionality that is of more general interest, and consequently Wikia and the WMF are working together more and more. As the Wikia software is localised at translatewiki.net, the functionality of the Translate extension and its reporting are important: Wikia needs to know how well the localisation of its software is doing.

To make things happen, Wikia produced specifications and wrote code that provides translatewiki.net with real-time statistics. As the Translate extension is very much under development, the Wikia code had to be back-ported by Niklas into the live code. This would have been less work if the Wikia developers had used the WMF Subversion repository.
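At its core, a statistic like "how well is the localisation doing" is the share of source messages that have a translation in each language. Below is a minimal sketch under that assumption. The function and data layout are hypothetical and do not reflect the actual code of the Translate extension or Wikia.

```python
from typing import Dict, Set


def completion(source_keys: Set[str],
               translations: Dict[str, Dict[str, str]]) -> Dict[str, float]:
    """Percentage of source messages that have a translation, per language.

    Translations of keys no longer in the source (obsolete messages) and
    empty strings are not counted.
    """
    if not source_keys:
        raise ValueError("no source messages")
    stats: Dict[str, float] = {}
    for lang, messages in translations.items():
        done = sum(1 for key, text in messages.items()
                   if key in source_keys and text.strip())
        stats[lang] = round(100 * done / len(source_keys), 1)
    return stats


# Hypothetical message group with four messages.
source = {"welcome", "save", "cancel", "help"}
print(completion(source, {
    "nl": {"welcome": "Welkom", "save": "Opslaan", "cancel": "Annuleren"},
    "lez": {"welcome": "...", "obsolete-key": "...", "save": ""},
}))
```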

It is wonderful to see how collaboration makes everybody a winner.

Thanks,

GerardM

Monday, September 19, 2011

#Unicode language names found by search engines

#Statistics are a wonderful thing. These numbers, compiled by "Kaśyap కశ్యప్", show how often the name of a language can be found when it is written in Unicode characters. This gives a clue about how well a search engine serves a language.

Such numbers also give an indication of how much a language is actually used on the Internet. What they do not say is how much demand there is to use a language on the web.

The Wikimedia Foundation's Localisation team is working hard to make it easier to use MediaWiki wikis. For us it is interesting to compare these numbers with the number of speakers for a language because this may indicate how hard it will be to make a particular Wikipedia more popular on the Internet.
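The comparison described here is a simple ratio. The sketch below ranks languages by search hits per speaker, lowest first. Both inputs are placeholders for figures like the ones above and for speaker estimates from elsewhere. A low ratio is only a rough hint of how hard a Wikipedia may be to promote, not a measurement of demand.

```python
from typing import Dict, List, Tuple


def hits_per_speaker(hits: Dict[str, int],
                     speakers: Dict[str, int]) -> List[Tuple[str, float]]:
    """Rank languages by search hits per speaker, lowest (least web presence) first.

    Languages without a positive speaker count are skipped.
    """
    ratios = [
        (lang, hits[lang] / speakers[lang])
        for lang in hits
        if speakers.get(lang, 0) > 0
    ]
    return sorted(ratios, key=lambda pair: pair[1])


# Placeholder codes and numbers, not real statistics.
print(hits_per_speaker({"xx": 1000, "yy": 50000}, {"xx": 1000, "yy": 10000}))
```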

Thanks,

GerardM

Sunday, September 18, 2011

One language, two scripts

#Tamaziɣt is one of the #Berber languages of North Africa. It is written in two scripts; the Latin script and the Tifinagh script.

As there is a project in the Incubator for a Tamazight Wikipedia, it is relevant to consider whether and how text can be transcribed from one script into the other. SPQRobin has done exactly that: he has written a program that converts text between these two scripts.

Transcription is not new to MediaWiki; Serbian and Chinese are two languages where articles can be available in two scripts. It is awesome when we can transcribe in both directions, as this allows people to read and write in their preferred script. When a language is also written in, for instance, the Arabic script, this is impossible because vowels are often not indicated.
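For two alphabetic scripts, the core of such a converter can be a character table applied in both directions. The sketch below is not SPQRobin's program. It covers only a handful of letters with a one-to-one mapping, and that property is exactly what makes conversion in both directions possible. A script that leaves vowels out breaks that property, because several Latin spellings then map to the same string and the way back is ambiguous.

```python
# A deliberately small subset of Latin letters and their Tifinagh
# counterparts (Unicode block U+2D30..U+2D7F). Real orthographies need more
# letters (emphatics such as ḍ and ṭ, for example) and more than a
# one-to-one table.
LATIN_TO_TIFINAGH = {
    "a": "\u2d30", "b": "\u2d31", "d": "\u2d37", "e": "\u2d3b",
    "f": "\u2d3c", "g": "\u2d33", "h": "\u2d40", "i": "\u2d49",
    "k": "\u2d3d", "l": "\u2d4d", "m": "\u2d4e", "n": "\u2d4f",
    "r": "\u2d54", "s": "\u2d59", "t": "\u2d5c", "u": "\u2d53",
    "w": "\u2d61", "y": "\u2d62", "z": "\u2d63",
    "\u0263": "\u2d56",  # Latin gamma (ɣ) -> Tifinagh yagh
}
# The table is one-to-one, so it can simply be inverted.
TIFINAGH_TO_LATIN = {tif: lat for lat, tif in LATIN_TO_TIFINAGH.items()}


def to_tifinagh(text: str) -> str:
    """Convert Latin-script text; characters outside the table pass through."""
    return "".join(LATIN_TO_TIFINAGH.get(ch, ch) for ch in text.lower())


def to_latin(text: str) -> str:
    """Inverse of to_tifinagh for the letters in the table."""
    return "".join(TIFINAGH_TO_LATIN.get(ch, ch) for ch in text)


word = to_tifinagh("Tamazi\u0263t")
print(word, to_latin(word))
```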

With both scripts supported, we hope that the converter will soon be available in the Incubator. This may stimulate more people to learn to write their language and to contribute to the dissemination of all knowledge to all people.

Thanks,

GerardM

A tale of two cities

I met Fahraan. She is Kurdish, and she speaks #Sorani and #Dutch. Fahraan is a refugee and has too much time on her hands.

Sulaymaniyah and Almere are two cities important to her; in Sulaymaniyah she was born and raised and she is now living in Almere. As a consequence, there are people in both cities that know Fahraan.

Fahraan often tells the story of her two cities: about Almere in Sorani and about Sulaymaniyah in Dutch. The suggestion that she write proper articles about these cities intrigues her. The existence of a Wikipedia in Sorani was a revelation to her.

Teaching someone like Fahraan to edit Wikipedia is cool. She will improve her Dutch and she will write in Sorani. Teaching a group of people like Fahraan is even better; even if only one of them writes the occasional article, the smaller projects will be happy to welcome that person.

Thanks,

GerardM

#Wikipedia usage data is more complete

When you compile #statistics about the usage of Wikipedia, you need raw data to base your statistics on. The data was not complete, and consequently the resulting statistics could be a little off.

Ariel Glenn wrote on Wikitech-l: "I think we finally have a complete copy from December 2007 through August 2011 of the pageview stats scrounged from various sources, now available on our dumps server." Having more complete data will probably not change our understanding of Wikipedia's history that much. As Wikipedia has already celebrated its 10th anniversary, there is obviously older data that is still missing. We welcome any and all data that gives us even more insight.
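For those who want to compile their own statistics from these files: as far as I know, the hourly pagecount files of that period have one line per page with four space-separated fields (project code, page title, number of requests, bytes transferred). Treat that layout as an assumption and check it against the files on the dumps server. A minimal sketch that sums the requests per page over any number of such lines:

```python
from collections import Counter
from typing import Iterable

def sum_pageviews(lines: Iterable[str]) -> Counter:
    """Sum request counts per (project, title) from pagecount-style lines.

    Assumes lines like "en Main_Page 242 4737756". Lines with the wrong
    number of fields or a non-numeric count are skipped rather than guessed at.
    """
    totals: Counter = Counter()
    for line in lines:
        fields = line.split()
        if len(fields) != 4 or not fields[2].isdigit():
            continue
        project, title, count = fields[0], fields[1], int(fields[2])
        totals[(project, title)] += count
    return totals
```

The lines of several hourly files can be chained into one call; if the files are gzip-compressed, `gzip.open(path, "rt", encoding="utf-8")` yields them as text lines.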

Thanks,

GerardM

Friday, September 16, 2011

MobileFrontend is #MediaWiki release 1.17

The #mobile gateway has been replaced and is running live. What is relevant is that Wikipedia runs MediaWiki release 1.17. This is a patched version of the official release, but it is release 1.17.

This factoid is relevant for people running their own MediaWiki project. It is more than likely that they can benefit from native support for mobile phones as well. When you consider how fast mobile traffic to Wikipedia is growing, it is a potential boon for any and all MediaWiki projects. It starts with installing the MobileFrontend extension, and there may be some configuration issues to tackle as well.

We may all have wish lists for improved support for mobile phones. What I would like to see is something like the Djatoka software. I blogged about Djatoka in March 2010; there are other similar solutions as well. Such software only sends images sized to fit on the screen. Given the cost of mobile bandwidth, it would save our readers money.
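The arithmetic behind "sized to fit on the screen" is simple. A minimal sketch, assuming the server already knows the screen size (how it learns it is left open) and never upscales; the function names are hypothetical:

```python
from fractions import Fraction

def fit_to_screen(width, height, max_width, max_height):
    """Return (w, h) scaled down to fit the screen, keeping the aspect ratio.

    Fractions keep the scale factor exact; images that already fit are
    returned unchanged because the scale is capped at 1.
    """
    scale = min(Fraction(max_width, width), Fraction(max_height, height), Fraction(1))
    return max(1, round(width * scale)), max(1, round(height * scale))

def pixel_saving(width, height, max_width, max_height):
    """Fraction of pixels not sent compared with the full-size image."""
    w, h = fit_to_screen(width, height, max_width, max_height)
    return 1 - Fraction(w * h, width * height)
```

A 4000x3000 photo on a 480x800 screen becomes 480x360, so roughly 98.6% of the pixels never travel over the mobile connection. Pixels are not bytes, since compression changes the real numbers, but the order of magnitude explains the money saved.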

Thanks,

GerardM

The name is Bon, James Bon II

Santhosh started work on a #Unicode UAX31 implementation. Good for us, because we support all the scripts for all the languages we have projects in. Our best practice has it that people can have a user name in their own language.

The UAX31 standard defines the rules that underpin our best practice. Writing a reference implementation that will actually be used is a bit different; the Wikimedia Foundation implemented a blacklist of names, for instance.

Once you start writing an implementation, you encounter all the ambiguities, all the issues that are still open. How, for instance, do you cope with the Arabic script, and what do you do with a "." or a full stop in the middle of a user name?

It makes sense to allow the characters that are used in a language. This implies that knowing what language to expect is crucial. There are two obvious approaches: you expect a new user name in the default language of the wiki, or the language is defined in the preferences.
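A minimal sketch of checking a user name against the expected language, assuming a hypothetical table from language code to accepted scripts. Python's `unicodedata` module does not expose the Unicode Script property, so the sketch uses a crude stand-in (the first word of the character name). It is not Santhosh's implementation and not the full UAX31 rules.

```python
import unicodedata

# Hypothetical table: scripts accepted for a user name, keyed by the
# expected language (the wiki's default language or the user's preference).
EXPECTED_SCRIPTS = {
    "nl": {"LATIN"},
    "ar": {"ARABIC"},
    "ml": {"MALAYALAM"},
}

def script_of(ch):
    # Crude heuristic: "MALAYALAM LETTER LA" -> "MALAYALAM". Real code would
    # use the Script property from the Unicode Character Database.
    return unicodedata.name(ch, "UNKNOWN").split()[0]

def fits_language(name, lang):
    allowed = EXPECTED_SCRIPTS.get(lang, set())
    for ch in name:
        category = unicodedata.category(ch)
        if ch == " " or category.startswith("N"):
            continue  # spaces and digits are treated as script-neutral here
        if category.startswith(("L", "M")) and script_of(ch) in allowed:
            continue  # letters and combining marks of an expected script
        return False  # anything else, such as "." in the middle, is rejected
    return True
```

With this table "Santhosh" fits "nl" but not "ml", the Malayalam spelling fits "ml", and "a.b" is rejected; whether a full stop should really be rejected is exactly one of the open questions mentioned above.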

When you are interested in this subject have a read of the current version of the code.

Thanks,

GerardM

Thursday, September 15, 2011

Too long; didn't read

I recently dumped #Techcrunch from my #reader. Techcrunch features too many long articles, articles with video, articles with long references to companies. It took me too long to scroll past them, so I finally got rid of the whole feed.

Many of the blog posts presented in my reader are "information dense" and too long. Typically they pass me by. Some have gems of information hidden among pebbles of data. Those posts I trawl for information and, you know what, they tend to provide me with popular topics for my blog.

A blog post needs to be short and sweet, preferably with only one point. An illustration needs to support the story or hint at more to come. In the end, a successful blog tells stories by frequently returning to the same subject.

Thanks,

GerardM

Professional #localisation at #translatewiki.net

When you implement an Open Source application, there are no warranties. When the usability needs improvement, you can pay for improvements or you can scratch your own itch.

Mifos is going to be implemented in Andhra Pradesh. The easiest and most obvious usability improvement is localisation and, as the statistics for the Telugu localisation show, a lot of work was done in an extremely short period of time by Avshyd.

When so much work is done, it is always of interest how it was done. Was it a one-man show or were multiple people involved? Was there any quality control and, finally, what do the users think? As Mifos is implemented in Telugu, there will be people working with the software and they can complain when the localisation needs improvement.

At translatewiki.net we are happy when our localisations are used. We are happy when people come to us and do the work for their own reasons. In the case of Mifos, it will mean that many more people will have access to credit; microcredit at that, but it is a huge step up.

Thanks,

GerardM

The roads of #India

One of the projects on the English #Wikipedia describes the roads of India. Given the size of India, the end result is something that can easily be called a book. It is therefore not that strange that it has become a book available from PediaPress.

A book like this is not without its issues. How, for instance, do you make an NPOV map of India when the borders of India are in dispute, and what do you do when the generation of the book creates errors?

Making maps of India that are acceptable to all is problematic. India has border disputes with several of its neighbours and, as a consequence, Indian maps show where India expects its borders to be. The same is true for its neighbours, and consequently the maps Google presents differ depending on where in the world you view them.

The law is often used to ensure the "right" presentation of borders, and therefore a book like this will never be commercially available in India.

Getting attention from the right people is (relatively) easy: you create a bug in Bugzilla and/or you talk on IRC to the people involved in the offline functionality and/or you scratch your own itch and dive into the code.

Thanks,

GerardM

#MediaWiki Babel scheduled to go live

Translatewiki.net has supported the Babel extension for a long time now. Practically everyone who contributes has their language skills on their user page, and the projects someone contributes to are added as well.

The big advantage of the Babel extension is that it works without templates and that new messages become available every day thanks to LocalisationUpdate.

Several projects have requested the implementation and, when Babel becomes generally available, it will be easy to add relevant information on the user page without the painful copying of a zillion templates.
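With the extension, one parser function call such as `{{#babel:nl|en-3|de-1}}` replaces the stack of templates; as I understand it, a bare code means native and a suffix gives the level. To show how little information that call carries, here is a hypothetical sketch that splits it into (language, level) pairs. It is not the extension's own code, and the accepted levels (0 to 5 and N) are an assumption.

```python
import re

# Hypothetical parser for a {{#babel:...}} call; levels 0-5 and N are assumed.
BABEL_CALL = re.compile(r"\{\{#babel:([^}]*)\}\}", re.IGNORECASE)
LEVELS = {"0", "1", "2", "3", "4", "5", "N"}

def parse_babel(wikitext):
    match = BABEL_CALL.search(wikitext)
    if match is None:
        return []
    boxes = []
    for item in match.group(1).split("|"):
        item = item.strip()
        if not item:
            continue
        code, sep, level = item.rpartition("-")
        # In this sketch a bare code ("nl") means native, and so does a code
        # whose last part is not a level ("zh-hant" keeps its full code).
        if not sep or level.upper() not in LEVELS:
            code, level = item, "N"
        boxes.append((code.lower(), level.upper()))
    return boxes
```

`parse_babel("{{#babel:nl|en-3|de-1}}")` gives `[("nl", "N"), ("en", "3"), ("de", "1")]`: a native speaker of Dutch with good English and basic German, without a single template.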

With the implementation scheduled for September 21st, this is surely happy news.

Thanks,

GerardM

What to do to fix #MediaWiki #language bugs

When we know what issues need to be addressed, we analyse them, we categorise them and then we ask who wants to fix them. It sounds simple and actually, it is a great way to get more people involved.

A bug triage has been held for the second time and this time two new people demonstrated their interest in working on language issues.

The process is quite cool: Siebrand explained the issues in each bug, he indicated what skill level is needed and what benefits there are to solving it. Bug 16175, for instance, has to do with the EditPage.php class, something Roan qualified as nasty and Bawolff as something that scares him late at night. Fixing it may result in many new messages that need urgent localisation. The upside is that it is a great introduction to some of the hard parts of the MediaWiki code.

Not everything was hard; some people indicated that they wanted to get involved without working on code. For them there were changes to the wording of messages and to the documentation of messages. The bug triage truly provided quite diverse opportunities.

As the participants were quite diverse, the hour reserved for the triage ended with a discussion of a schema change. This high-level discussion was nice because of the pragmatic way the consequences were discussed. A log of this triage has been published. Read it; it may whet your appetite to get involved in MediaWiki and language support.

Thanks,

GerardM

Monday, September 12, 2011

What is New at #Wikipedia

The Wikimedia Foundation is no stranger to #GoogleTechTalk. The latest instalment is a presentation by Erik Moeller, Rob Lanphier and Alolita Sharma that just became available on YouTube.

It gives advance information on the technology that is being developed and, if you are interested in what MediaWiki may provide us with in the near future, it is a must-see. It also gives you some of the latest thinking on Wikipedia.

Have a look and enjoy.

Thanks,

GerardM

#Localisation rally for #MediaWiki and #Kiwix

The #translatewiki.net localisation rally is finished. The results, particularly for Kiwix, are great. Thanks to the addition of 2,672 messages, Kiwix is now fully localised in 53 languages. This makes it much easier for people to use our content offline.

When you read the announcement of the results, it is particularly the smaller languages that have benefited, with the exception of Traditional Chinese. When you look at the statistics, there are more languages that could have benefited from the rally and/or from more localisations.

This time the benefit to translators is EUR 37.50. The benefit to the users of the 20 languages, however, is where this rally makes a real difference.

Thanks,

GerardM

#Agile the process works !!

The "stand-up meetings" of the Wikimedia L10N team are great. Every day, in the European morning, we learn briefly about the latest developments, the work done and the work planned for the day.

Stand-up meetings are part of agile/Scrum software development. They are great because you learn what the current issues are.

What you want to know is whether things like stand-up meetings are worth their time. We wrestled with support for Narayam in the Chrome browser. Amir was fixing an issue with searching strings and was struggling with Chrome as well. Thanks to the stand-up meeting we learned early on that Narayam and WikiEditor Search are likely to suffer from the same underlying issue.

The amount of time saved is a benefit. The real benefit is that it helps us to become more of a team. A team that understands that everyone in the team is a resource that can be drawn upon.

Thanks,

GerardM

Sunday, September 11, 2011

nine-eleven revisited

This day ten years ago, I was in a shop that had a TV turned on. I saw a plane crash into a skyscraper. The consequences have been horrible. Nearly 3,000 people died in this abomination; many, many more died as a consequence of the wars that followed.

Today, ten years on, I met a local imam here in Almere. The date of the appointment was quite accidental, but we did talk briefly about the September 11th attacks. It is horrible when you consider how a small group of fanatics was allowed to bring so much misery on all Muslims.

Wikipedia was another subject; the need for free and neutral information was considered very important. I may even quote my imam, as he said: "the biggest challenge of mankind is ignorance and then illiteracy". We had a look at Wikipedia, both the Arabic and the Dutch Wikipedia, and found that articles that explain Morocco and Islam could do with a lot more tender loving care.

Bringing Muslims to Wikipedia does not need to be more than just reaching out. I indicated that I am happy to give some instruction in Almere. I truly do not care in what language they write, where they come from or what their culture is. What I care about is that we grow the sum of available knowledge and share it widely.

Thanks,

GerardM

Thursday, September 08, 2011

#Translatewiki.net best practices

When a user gets new rights, there is always a hiatus between the moment of requesting such rights and giving such rights. Localisers are valuable to us; we do not want to lose any and particularly we have high hopes for any and all new people willing to do the work.

Some of the bureaucrats at translatewiki.net send e-mails to the new users to whom they grant user rights, welcoming them and occasionally providing some extra information. In recognition of this practice, it is now possible to send e-mail directly from the User rights management special page.

As a bureaucrat you do not really know who you are sending a mail to. The logging system that is being revamped, however, will have more of a clue. The User rights log is only one of the logs that will be modernised and will support plural and gender. The first four associated messages are now available at translatewiki.net.

Thanks,

GerardM

The name is Bon, James Bon

Santhosh is one of the special agents in the fight to bring language support to the Internet. Identifying him in English is easy; all the characters used to transliterate സന്തോഷ് തോട്ടിങ്ങല് are available to identify Santhosh for who he is.

The last character in his name is a "zwj", a zero-width joiner. According to some, this character is not available for identification purposes. Without the "zwj", the name looks different:

- സന്തോഷ് തോട്ടിങ്ങല്

- സന്തോഷ് തോട്ടിങ്ങല്

It becomes more interesting when you write Sri Lanka in Sinhala; this cannot be done without a "zwj". From a Wikimedia Foundation point of view, the Unicode report "Unicode Identifier and Pattern Syntax", which treats the scripts of many languages as "aspirational" or "limited" use, is not really workable. Our aim is to support all scripts and to identify people by their name; their real name.
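The "zwj" problem fits in a few lines of Python. A naive policy that strips every format character (Unicode category Cf) silently turns two visibly different spellings into one, while a context rule only admits the ZWJ where it does real work. The check below is a simplified sketch loosely modelled on the UAX #31 rule that allows a ZWJ after a virama; the function names are hypothetical and the real rule also looks at the surrounding letters and marks.

```python
import unicodedata

ZWJ = "\u200d"  # ZERO WIDTH JOINER, general category Cf (format character)

def strip_format_chars(name):
    # The naive policy: drop every format character before comparing names.
    return "".join(ch for ch in name if unicodedata.category(ch) != "Cf")

def zwj_allowed(name):
    """Accept a ZWJ only directly after a virama.

    The virama is recognised by its canonical combining class, which is 9
    for viramas such as Malayalam U+0D4D and Sinhala al-lakuna U+0DCA.
    """
    for i, ch in enumerate(name):
        if ch == ZWJ and (i == 0 or unicodedata.combining(name[i - 1]) != 9):
            return False
    return True

# "Sri" as in Sri Lanka, written in Sinhala:
# letter sha, sign al-lakuna (the virama), ZWJ, letter ra, vowel sign ii.
SRI = "\u0dc1\u0dca\u200d\u0dbb\u0dd3"
```

`zwj_allowed(SRI)` accepts the Sinhala spelling while `zwj_allowed("a\u200db")` rejects a stray joiner in a Latin name, and `strip_format_chars(SRI)` shows what the naive policy would turn the name into.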

As we do identify people, an implementation of this Unicode specification is important to us. Having people like Mr തോട്ടിങ്ങല് in the driver's seat will surely get us the best result. It may even get us a reference implementation.

Thanks,

GerardM

Wednesday, September 07, 2011

#Localisation for your language on one map

At #translatewiki.net we are experimenting with Semantic MediaWiki. One of its features is the use of maps. We have implemented this for the language portals; in this way people can see where the localisers for a language can be found, as in the example for Hindi.

As you can see, only two of the localisers for Hindi entered their location on their user page. It shows how we change things: we include a new mechanism or thingie and, as it gains appreciation, the data shown becomes more inclusive.

One challenge I am now facing is how to make a map of the people who completed their 500 localisations for the rally.

Thanks,

GerardM

Monday, September 05, 2011

A log fit for reading by its public

Notable events on #Wikipedia are logged; a new user, or moving or deleting an article, for instance. The log entries are human readable and consequently they are a combination of unique data and fixed texts.

That is in and of itself a problem; the fixed texts can be replaced by different fixed texts to function as a translation, but this does not necessarily make the resulting string a proper sentence. A sentence can be different when Jane does something instead of Joe and, when she does it twice, it can be different again.

It is best practice to address Jane as female and to acknowledge how much gets done. This means that the logging system needs to be aware of the gender of the actors and the number of actions. Implementing the necessary changes is under way. First the required mechanisms will be built into the logging system. Once this is done, each and every log will be internationalised. This will result in new messages at translatewiki.net and, once they are localised, there will be a log fit for reading by its public.
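MediaWiki messages already know `{{GENDER:...}}` and `{{PLURAL:...}}`; what a log needs is to hand them the actor and the count. To show why those two parameters are enough, here is a toy formatter that mimics the idea. It is not MediaWiki's parser: it handles one level of these two constructs, only the English plural rule, and assumes the gender comes from a user preference with the values "male", "female" or "unknown".

```python
import re

# Matches {{GENDER:arg|form|...}} and {{PLURAL:arg|form|...}} (no nesting).
MAGIC = re.compile(r"\{\{(GENDER|PLURAL):([^|}]*)\|([^}]*)\}\}")
PARAM = re.compile(r"\$(\d+)")

def english_plural_form(n):
    # English has two forms: index 0 for exactly one, index 1 otherwise.
    # Other languages need their own rules; Arabic, for instance, has six forms.
    return 0 if n == 1 else 1

def format_log_entry(template, params, genders):
    """params: values for $1, $2, ...; genders: user name -> gender preference."""
    def substitute(text):
        return PARAM.sub(lambda m: params[int(m.group(1)) - 1], text)

    def choose(m):
        kind, arg = m.group(1), substitute(m.group(2)).strip()
        forms = m.group(3).split("|")
        if kind == "GENDER":
            index = {"male": 0, "female": 1}.get(genders.get(arg, "unknown"), 2)
        else:
            index = english_plural_form(int(arg))
        return forms[min(index, len(forms) - 1)]  # missing forms: use the last one

    # Pick the forms first, then fill in parameters such as "$2 pages".
    return substitute(MAGIC.sub(choose, template))
```

With the template `{{GENDER:$1|He|She|They}} deleted {{PLURAL:$2|one page|$2 pages}}.`, Jane deleting two pages gives "She deleted 2 pages.", Joe deleting one gives "He deleted one page.", and a user without a gender preference gets "They".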

Thanks,

GerardM

#Localisation rally for #MediaWiki and #Kiwix

The Dutch #Wikimedia chapter regularly sponsors #translatewiki.net to run a translation rally. Such a rally serves multiple purposes:

- to bring the necessity of localisation to the front

- to support the localisation of MediaWiki and Kiwix

- to make the MediaWiki projects more usable

The translatewiki rallies give our localisers an additional incentive to do a little bit more. Many languages, including big ones like Spanish or Hindi, could do with more effort. Both languages are used as "fallback" languages when the localisation of another language is not adequate, but they need to be complete to do that job.
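Why a fallback language needs to be complete can be shown with a small sketch. The catalogue contents, the language code "xx" and the fallback table are all hypothetical; the idea is that a reader of "xx" gets Spanish wherever Spanish is localised and drops straight to English wherever it is not.

```python
# Hypothetical message catalogues and fallback chains; English is the last resort.
CATALOGUES = {
    "en": {"edit": "Edit", "save": "Save page"},
    "es": {"edit": "Editar"},  # incomplete: "save" is missing
    "xx": {},  # a hypothetical language with no localisations yet
}
FALLBACKS = {"xx": ["es"]}

def lookup(key, lang, catalogues=CATALOGUES, fallbacks=FALLBACKS):
    """Return (text, language used): try lang, then its fallbacks, then English."""
    for code in [lang, *fallbacks.get(lang, []), "en"]:
        text = catalogues.get(code, {}).get(key)
        if text is not None:
            return text, code
    raise KeyError(key)
```

Here `lookup("edit", "xx")` returns the Spanish "Editar", but `lookup("save", "xx")` falls through to English because the Spanish catalogue is incomplete, so every missing Spanish message is also missing for every language that relies on Spanish.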

MediaWiki does gain relevant functionality over time; offline support with Kiwix and mobile phone support, now integral to MediaWiki, are recent examples. This support is not restricted to Wikipedia; it is available to Wikimedia and non-Wikimedia projects alike.

You can join in the fun of the rally and make the user interface in your language more usable. The info can be found here, but your contributions are welcome for any and all of the projects we support at translatewiki.net.

Thanks,

GerardM
