Thanks,

GerardM

Wednesday, September 30, 2009

A language's journey out of digital exclusion

Kabuverdianu is a language spoken on the islands of Cape Verde. It is a Portuguese-based creole language; it is the mother tongue of virtually all Cape Verdeans, and it is used as a second language by descendants of Cape Verdeans in other countries. This amounts to almost a million speakers worldwide. (1)

However, it is still virtually nonexistent in the written and digital world. Even in Cape Verde, where it is the only language spoken on an everyday basis, the official language is still Portuguese, which is used in schools, press, TV and the Internet.

This happens primarily because the language hasn't been standardized. There are many works published in Kabuverdianu, but there's no official grammar or dictionary. Fortunately, there is an official alphabet, which aims to standardize the transcription of the language's sounds to written form, which until now was done following the taste and creativity of each author. But an alphabet is barely enough for a start! Much more needs to be done.

The language had already been assigned an ISO 639-3 code (kea), but that only gives it a name. In order to have a digital existence, a language must have an entry in the CLDR (Common Locale Data Repository), which is a project by the Unicode Consortium aiming to provide basic locale data for all languages in order to allow software to be translated.

This important step in the language's journey to digital existence has now been taken. The next CLDR submission window is scheduled for October, so I gathered a few people (2) some days ago and we worked on translating the data through AfriGen, a project of ANLoc (the African Network for Localization). It took a while, since there is no dictionary on which we could base translations of things such as country or currency names. There were also meeting constraints, due to the different time zones we live in. At some point I started to fear we might not finish in time for this release. But yesterday we managed to discuss the last entries, and finally complete the locale! It can now be seen on AfriGen's statistics page, with every data block beautifully at 100%! :)

With this, many things can now be done. Since CLDR is used by many software packages, Kabuverdianu will soon become available as a language into which operating systems and applications can be translated. We will be able to translate OpenOffice, the Linux and Mac interfaces, Firefox, and even the interface for websites such as Google, YouTube, Wikipedia or Facebook!

And this will be just the beginning! A toast to the bright future of Kabuverdianu.

Thanks for reading!

Waldir

1) adapted from the Wikipedia article Cape Verdean Creole

2) Thanks, Amílcar Tavares and Etelvino Garcia!

Monday, September 28, 2009

What is the benefit of translatewiki.net as a WMF project?

Translatewiki.net tweets. This recent tweet is more than interesting, as it indicates that a future where translatewiki.net is a Wikimedia Foundation project is possible. It asks what the benefits and the drawbacks of such a move are.

Benefits:

- It will make it obvious that the WMF cares about language support

- Translatewiki.net will gain more recognition as a project

- It will make translatewiki.net more sustainable

- It may attract more developers to translatewiki.net

- It will attract more localisers to translatewiki.net

- More open source applications can be localised at translatewiki.net

- The WMF community may want to influence some of the translatewiki.net policies

- eh..

It is exciting that translatewiki.net as a WMF project is being considered. Do you see any other advantages or drawbacks?

Thanks,

GerardM

Sunday, September 27, 2009

First numbers on OSM localisation

Siebrand provided me with these statistics on the work that has been done to localise Open Street Map. The project page on translatewiki.net shows a graph with the aggregated numbers. Siebrand also updated the trunk stats for Open Street Map. When you look at these numbers and at the trunk stats, you will find that some languages now have a full localisation, that there are 131 new (qqq) messages documenting the messages that are to be translated, and that OSM started with a lot of localisation work that had already been done.

php languageeditstats.php --top=20 --day=20 --ns=1222

hsb 852
fr 589
nl 485
ru 354
nds 324
km 257
sr-ec 195
sv 187
sk 186
es 139
br 139
qqq 131
af 123
no 117
ja 113
pt-br 108
ksh 101
fi 97
hi 83
be-tarask 77

Open Street Map currently has 783 messages to localise.
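
For those who want to play with the numbers quoted above, they can be tallied in a few lines of Python. This is only a sketch: the function name `parse_edit_stats` is made up for this example, and the input is simply the output quoted in this post, not something fetched from translatewiki.net.

```python
# Output of languageeditstats.php as quoted in this post (language code, edits).
RAW_STATS = """hsb 852 fr 589 nl 485 ru 354 nds 324 km 257 sr-ec 195 sv 187
sk 186 es 139 br 139 qqq 131 af 123 no 117 ja 113 pt-br 108 ksh 101 fi 97
hi 83 be-tarask 77"""


def parse_edit_stats(raw):
    """Turn 'code count code count ...' into a list of (code, count) pairs."""
    tokens = raw.split()
    return [(tokens[i], int(tokens[i + 1])) for i in range(0, len(tokens), 2)]


stats = parse_edit_stats(RAW_STATS)
total = sum(count for _, count in stats)

for code, count in stats:
    # Share of each language in the edits made by the listed top 20.
    print(f"{code:<10} {count:>4}  {100 * count / total:5.1f}%")
print(f"{'total':<10} {total:>4}")
```

Note that these are edits, not messages, so a language can have more edits than the number of messages in the project.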

Thanks,

GerardM

Where do all the anonymous edits come from

I learned from Natalie on IRC about this site; it shows, in near real time, where the latest anonymous Wikipedia edits are coming from.

Thanks,

GerardM

Saturday, September 26, 2009

Wikimedia Staff office hours with Sue Gardner

Last night, the first Q&A session on IRC with Wikimedia Foundation office staff was held, and Sue Gardner, the WMF director, was available for questions. Such meetings are interesting because they are as informative about our community through the questions asked as through the answers given.

"en.wiki is the largest project and the breadwinner. It can go first"

"is it not a goal of the foundation to try to at least survive?"

I asked one question: "How important is growing traffic for our smallest projects". The answer that was given was more about the language versions of Wikipedia than about the smaller projects. When you consider that Wiktionary is the biggest lexical resource on the Internet, it is clear that an answer is of interest.

The answer I got was interesting: "I think our obligation is to focus our energy, for the most part, on the projects that have the greatest potential. And by "potential," I would mean the projects where there is a very large available readership (speakers of the language, internet-connected, literate). I guess I feel we have an obligation to focus our energy where it will make the most difference -- where there is enormous potential. That is easier said than done.."

My question was about growing traffic and it is interesting that the Russian Wikipedia traffic grew 117% in a year. There was a reaction in the chat that this was due to bot generated articles and while this may be true, the number of new editors is also much bigger for the Russians. The Russian localisation is also complete so as I see it, they are really doing well where it counts.

So when potential is the key consideration: the English Wikipedia grew 13% in traffic on a yearly basis and ranked number 155 in growth. The Swahili Wikipedia grew 150% and is ranked number 10. Obviously growth in percentage is not growth in absolute numbers; this is best understood when you realise that all Wikipedias together grew 15%.
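
The difference between relative and absolute growth can be made concrete with a small calculation. The page view baselines below are invented purely for illustration (they are not real traffic figures); only the growth percentages come from this post.

```python
# Hypothetical yearly page views BEFORE growth; these baselines are made up
# for illustration. Only the growth rates are taken from the post.
projects = {
    "English": {"before": 1_000_000, "growth": 0.13},
    "Russian": {"before": 50_000, "growth": 1.17},
    "Swahili": {"before": 100, "growth": 1.50},
}


def absolute_gain(before, growth):
    """Extra page views that a given relative growth represents."""
    return round(before * growth)


gains = {name: absolute_gain(p["before"], p["growth"])
         for name, p in projects.items()}
total_before = sum(p["before"] for p in projects.values())
overall_growth = sum(gains.values()) / total_before

for name, gain in gains.items():
    print(f"{name}: +{projects[name]['growth']:.0%} is {gain} extra views")
print(f"combined growth: {overall_growth:.1%}")
```

With baselines like these, the combined figure stays close to that of the largest project, however fast the small ones grow.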

There is a potential for growth for the Wikipedias in other languages because they do not cover the most wanted articles yet. We do not even know what people want to read. In my view, concentrating on what people want to read, together with improved localisation, is what will realise the growth of our other Wikipedias.

Thanks,

GerardM

Friday, September 25, 2009

One category of problems now has a solution

On all the Wikimedia Foundation projects you are invited to "try beta". When you do, you will make use of the experimental changes made by the Usability Initiative. These changes already make a big positive difference.

In the evaluation of the feedback from the beta test, it was found that many people leave the beta when they use a language that is not fully localised. These messages are best localised at translatewiki.net, and with LocalisationUpdate now implemented on all WMF projects you will find that localisations are typically available within two days.

When a lack of localisation is no longer an issue, we may find the problems with the software that a developer can deal with. It is for this reason that we ask you to localise these messages. If you are not involved in translatewiki.net yet, please create a user, tell something about yourself on your user page and ask for translator rights.

Thanks,

GerardM

LocalisationUpdate is live

In February I discussed the inability to update the MediaWiki messages with Tom Maaswinkel. The consequence of this inability was that several people did not localise at translatewiki.net. They did not see the benefits of their work because it took months before the messages became available.

What we needed was a system that would compare the English message in SVN with the local message. When they were exactly the same, it would be certain that all the localisations for that message would be valid and could be used. Tom wrote the first version of the LocalisationUpdate and it worked.
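
The idea can be sketched in a few lines of Python. This is not the actual LocalisationUpdate code (which is a MediaWiki extension); the function name, message keys and texts below are invented for illustration. It only shows the rule described above: a newer translation is accepted only when the English source text in the repository is identical to the English text the wiki is running.

```python
def select_safe_updates(local_english, repo_english, repo_translations):
    """Return translations that can safely be applied to the running wiki.

    local_english:     message key -> English text of the installed software
    repo_english:      message key -> English text currently in the repository
    repo_translations: message key -> newest translation for one language
    """
    updates = {}
    for key, translation in repo_translations.items():
        # An unchanged English source means the translation still describes
        # the same message, so it is valid for the installed version.
        if key in local_english and local_english[key] == repo_english.get(key):
            updates[key] = translation
    return updates


# Hypothetical message keys and texts, for illustration only.
local_english = {"save": "Save page", "search": "Search"}
repo_english = {"save": "Save page", "search": "Search this wiki"}
repo_dutch = {"save": "Pagina opslaan", "search": "Deze wiki doorzoeken"}

print(select_safe_updates(local_english, repo_english, repo_dutch))
```

Here only the "save" message is updated; the English text for "search" has changed in the repository, so its new translation has to wait for the next software update.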

When it works for me, it does not mean that it works for somebody else. The next hurdle was to convince people that we need this for all the WMF projects. I talked with Siebrand. He was more realistic than I was; he expected that it would take at least six months before it might go live. We talked with people and it was when Tom came to California that the idea of timely updates was adopted by the WMF.

Several people, among them Roan, Niklas and Brion, did a lot of work on it, and in the end it was found that it could only go live after the next software update. That was at Wikimania. Today the LocalisationUpdate is live and it already provides some 5257 messages. This is already more than twice the number of all the MediaWiki core messages.

The LocalisationUpdate may not be glamorous, but it makes all the difference to the people who localise; they find that their work matters and will now be available in a timely manner. This in turn makes all the difference to our users; they will benefit from the improved usability that is the consequence of the improved localisations.

I want to thank and congratulate Tom, Brion, Roan, Tim and Niklas for their efforts. This is an awesome moment.

Thanks,

GerardM

Thursday, September 24, 2009

Another fine graph

For Wikipedia the number of editors is considered to be a very relevant statistic. We do not have those numbers at translatewiki.net. What we do have is the number of editors on a day.

The graph shows, among other things, that our summer rally did not only get us more localisations; it also brought us more people. At this moment there is an influx of new people interested in localising OpenStreetMap. The result is that some of the languages are now completely localised while others have started.

At the moment Brion is implementing LocalisationUpdate. I wonder how this will affect the statistics in the days to come.

Thanks,

GerardM

Wednesday, September 23, 2009

The chart now shows all languages

Yesterday I published this graph created by Dedalus. He has updated it and included all languages that have a Wikipedia. The one with a localisation below 0.2 at the left hand side is the Nepal Bhasa Wikipedia; it has 54,934 articles and 12.39% of its core messages have been localised.

Thanks,

GerardM

LIES, DAMNED LIES AND STATISTICS

Statistics are in and of themselves not that valuable; it is the interpretation of statistics that makes them relevant. When such relevance is ignored, people are accused of "gaming" the system. One of the best examples is the large addition of articles to the Volapük Wikipedia. As a result it became a top 10 project by number of articles, to the dismay of many Wikipedians. This resulted in the devaluation of the article numbers for Wikipedia.

The issue with the Volapük approach is that the objective of the addition of these articles was achieved; the amount of traffic and consequently the exposure of Volapük increased significantly.

The top 30 Wikipedias get 98.31% of the traffic, and languages like Hindi and Bengali are not part of this. It has been said that we want our "other" languages to do well; it is even one of the "emerging strategic priorities". When growth of our projects is a priority, we should have tools that help us decide what to do. Instead of relying on rhetoric, we could rely on statistics. When we are to rely on numbers, the question becomes what numbers. Even more important is how we will use them.

The problem with statistics is that they are reflective and our need is to energise people. Translatewiki.net shows who was most active in the last 7 days. This activity does not get us more traffic but we know it gives people an incentive to do more and we should use our numbers in this way.

When we want more traffic, our statistics should help us decide what to work on. Most obvious are the articles we do not have, the articles that are not found. At this moment our statistics do not inform us what people are looking for. When we do know, we can write the new articles or create the redirect pages and keep our audience reading our project.

It is feasible to generate a list of newly created articles in the previous month and sort them by the amount of traffic they generated. This helps people focus their attention. Such lists can be easily generated for the smaller projects and it is exactly the smaller projects where these lists will have the most impact.
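
A minimal sketch of such a list, in Python, could look like this. The function names and the sample data are invented for this example; where the creation dates and page view counts would really come from is left open, since this post does not say.

```python
from datetime import date


def previous_month(today):
    """Return (year, month) of the calendar month before `today`."""
    if today.month == 1:
        return today.year - 1, 12
    return today.year, today.month - 1


def top_new_articles(articles, today):
    """Articles created last month, most viewed first.

    `articles` is a list of (title, creation_date, page_views) tuples.
    """
    year, month = previous_month(today)
    recent = [a for a in articles
              if (a[1].year, a[1].month) == (year, month)]
    return sorted(recent, key=lambda a: a[2], reverse=True)


# Invented sample data; titles and view counts are placeholders.
sample = [
    ("Article A", date(2009, 8, 3), 120),
    ("Article B", date(2009, 8, 21), 950),
    ("Article C", date(2009, 7, 30), 4000),  # created earlier, so excluded
    ("Article D", date(2009, 8, 9), 15),
]

for title, created, views in top_new_articles(sample, date(2009, 9, 23)):
    print(title, created.isoformat(), views)
```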

NB this is just one approach that will improve our traffic.. there are others :)

Thanks,

GerardM

LocalisationUpdate went live and then the lights went out

Yesterday the much anticipated LocalisationUpdate extension went live on the servers of the Wikimedia Foundation. This was from my point of view a disaster.

So what happened? Brion installed the LocalisationUpdate software and it invalidated the cache for all projects. This will certainly lead to increased CPU usage. Then he stopped LocalisationUpdate, and it was found that it is not possible to do this gracefully, and the system stopped.

The good news is that everyone is convinced that the functionality of LocalisationUpdate is extremely relevant. Research on the functionality of the Usability Initiative, for instance, showed that the lack of available localisations is what stops the adoption for some languages, and LocalisationUpdate is the only mechanism under consideration that is able to make these available in a timely manner.

When LocalisationUpdate will go live is anybody's guess. The good news is that developers like Roan are involved and are eager to find a solution. The Usability Initiative will certainly be one of the more obvious beneficiaries of having a fully functional LocalisationUpdate, but most of all, everyone who uses a WMF project not in English will benefit.

Thanks,

GerardM

Tuesday, September 22, 2009

When size is good, a cool million for translatewiki.net

Translatewiki.net has a million translated messages. Actually there are 1,028,784 pages; there are some 15K source messages and there are likely fewer than 13K other pages. This makes for a cool million translated messages.

When you compare these statistics with statistics about Wikipedia, they are essentially different. We share the notion that our community is what is most precious in our project, but for us it is important to have people who come regularly to our project to work on what is, for them, a finite amount of work.

The group statistics tell the story best for MediaWiki; some languages are 100% localised and you will notice from the screen shot that this is achievable for every language big and small.

I received a graph from Dedalus that shows a correlation between the number of articles and the quality of the localisation. In essence all Wikipedias over a certain size are completely localised. It makes sense because how can you appreciate what you are asked to do otherwise?

Thanks,

GerardM

Monday, September 21, 2009

Open Street Map goes translatewiki.net

While the Wikimedia Foundation is preparing the availability of the maps of OpenStreetMap in its projects, I am happy to inform you that the internationalisation and localisation of this great project has found its home at translatewiki.net as well. The project page at translatewiki.net shows its first localisations, and issues like YAML support, plural support in Rails and commit access have been overcome.

A big thank you to Siebrand, Nikerabbit and Aevar who did a lot of work to make this happen.

Thanks,

GerardM

Sunday, September 20, 2009

Wikivoices - Episode 48

Wikivoices episode 48 is different. Typically a Wikivoices episode is a topical conversation among a group of people. This time, Adam Cuerden took the initiative to explain how to edit historic sound files.

He explains what to do with a great performance by Emmy Destinn, who recorded Rusalka by Antonín Dvořák in 1915 in Camden. Emmy Destinn was a prima donna in her time and it is a great performance.

A recording from a phonograph cylinder has its hisses and ticks, and Adam does a great job explaining how to use Audacity for the restoration of sound files. Audacity is a great tool for the job; there is an implementation for Windows, Macintosh and Linux and there is a localisation in many languages.

The combination of an explanation in sound and text makes for an exceptional and superb Wikivoices episode.

Thanks,

GerardM

How to collaboratively work on standards

For NEN, the standards organisation in the Netherlands, Wikipedia is considered as a model where a community is engaged. NEN invited me because I had presented at the ISO conference in Milan before. I presented a few slides about Wikipedia and I added a slide about my view on standards. After this introduction there were two themes.

- How does Wikipedia work and, how does it finance itself

- How can the Wiki way be applied to the development of standards

When you consider developing standards by a community, you change the role of the standards organisation. From a full participant, it changes to the role of a process manager. This means that it guarantees the quality of what is delivered but is not so much involved in the content itself. This change in role removes the argument why the NEN is not in the business of certifying the implementation of standards. The argument is that there is a conflict of interest when you both define standards and get paid for assessing these same standards.

When a standards organisation is involved in standard development as a process manager and as the one who validates and certifies the implementation of standards, there is no longer such a conflict of interest.

The question then becomes which standards a community would want to develop. I am sure that there are plenty of domains where the expertise of developing standards would be welcomed because standards make things measurable, more concrete.

Thanks,

GerardM

Saturday, September 19, 2009

The absence of standards hurts

I had an appointment at the NEN, the Dutch standards organisation. That did not go as planned. I received a message indicating that the meeting was cancelled.

At the time of the meeting, I received a telephone call asking where I was... It turned out that my Google calendar and their Lotus Notes did not talk properly. We made a new appointment.

When I arrived at the NEN office, the receptionist told me "we expected you two hours earlier". Again, I was bitten by the incompatibility between two calendar systems. I may not have researched whether there is an applicable standard, but I definitely feel the need.

Thanks,

GerardM

Sometimes it is better when software does not work at all

MediaWiki supports pictures saved in the PNG format.. after a fashion; thumbnails for PNG files will not generate properly when the original file is bigger than 12.5 megapixels. It is understandable when admins delete a file that is broken. Reasoned, but problematic and actually even unacceptable for the reasons given below.
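
Whether a given PNG is over that size can be checked from its first few bytes. The Python sketch below is an illustration, not MediaWiki code: the function names are invented, and the 12.5 megapixel limit is taken from this post as an assumption. It reads the width and height from the IHDR chunk, which the PNG format places directly after the 8-byte signature.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
# Limit mentioned in this post; treat the exact value as an assumption.
MAX_THUMBNAIL_PIXELS = 12_500_000


def png_dimensions(data):
    """Read width and height from the IHDR chunk at the start of a PNG."""
    if data[:8] != PNG_SIGNATURE or data[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    width, height = struct.unpack(">II", data[16:24])
    return width, height


def exceeds_thumbnail_limit(data, limit=MAX_THUMBNAIL_PIXELS):
    """True when the image has more pixels than the limit."""
    width, height = png_dimensions(data)
    return width * height > limit


def fake_png_header(width, height):
    """Build just enough of a PNG (signature + start of IHDR) for the check."""
    return (PNG_SIGNATURE + struct.pack(">I", 13) + b"IHDR"
            + struct.pack(">II", width, height))


print(exceeds_thumbnail_limit(fake_png_header(3000, 4000)))  # 12.0 megapixels
print(exceeds_thumbnail_limit(fake_png_header(5000, 4000)))  # 20.0 megapixels
```

For a real file, passing the first 24 bytes of the file to `exceeds_thumbnail_limit` is enough.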

Pictures saved in the PNG format are saved without losses. This means that the quality of a picture will not degrade, no matter how often you save it. This allows for the collaborative editing that is so much part of our ethos. Collaborative editing is what makes Wikipedia what it is. The majority of the pictures at Commons have been saved in the JPG format; it is only because the pictures are never updated that the quality does not deteriorate.

For people who work on our pictures, lossless formats like TIFF and PNG are preferred. The PNG format is particularly nice because it is well supported on many computer platforms and in many applications. It is an ideal format for people who scan high resolution pictures from print, folders or books.

The best practice for restorations asks us to retain original material, irrespective of whether a scan was done by a GLAM or by a Wikimedian like Shoemaker's Holiday. It is utterly frustrating when on the one hand support for the TIFF format is promised, while the PNG format, which is as deserving, fails us because it has only partial support.

Technically the PNG format is in some ways superior to the TIFF format because it requires less storage. The one reason why we need to support the TIFF format is because the chain of provenance is maintained when we retain a copy of the original material from GLAMs. This has proven a powerful argument that helps GLAMs to consider sharing their content with us. At this moment we do not show our TIFF files at all. This is known and accepted and as such, our TIFF files are safe.

People are not as aware of the issue with PNG files, and files have been deleted without following the appropriate procedures. Informing about the issue is what needs to be done for now. Fixing this bug is the real solution.

Thanks,

GerardM

Two bonuses for FUDforum

FUDforum, one of the applications localised at translatewiki.net, announced that they provide a bonus to the first two people who do a full localisation for their language. I quote their rules:

- Pick a language that has less than 50% of its messages translated from here (new languages can be added).

- Translate the remaining messages on the TranslateWiki website (let me know if you need help to get started).

- When done, announce your claim to the bounty here.

- The first two valid claims will be awarded US$100.

- Our decision is final. We will have full discretion to disqualify anyone who in our opinion acts outside the intentions of this bounty.

Thanks,

GerardM

Thursday, September 17, 2009

An 853 MB zip file

When I showed the Tropenmuseum what kind of restorations we have done, I showed among many others the restoration of the Hotel del Coronado. One of the aspects that got their attention was the quality of the stitching and how many of the technical issues that go with a work divided in two were solved.

I know that Durova is in for a challenge, but this is an 853 MB zip file containing twenty-nine TIFF files. These 29 files represent 29 shots of one Indonesian story "cloth" (I do not know the proper English word :) ). It is something like 15 metres long and has been photographed by the Tropenmuseum in one go, in one place, by moving the cloth along in order to keep the same lighting for the whole session.

This is the kind of challenge that sends shivers up my spine. Just consider what it will do to your system when you have to manipulate one such file. Then consider that you have to make 28 stitches and ensure that it stays whole for all of them.

As I understand it, this story cloth is one of the items that the Tropenmuseum has in stock. Once Durova has finished this work, it will be much bigger than the biggest TIFF we have manipulated before. Her work will be available under a free license, and it will be a challenge to get this file on Commons.

Thanks,

GerardM

Wednesday, September 16, 2009

Tropenmuseum update

Several noteworthy things are happening about the Tropenmuseum.

- there is an information page about the Tropenmuseum at Commons

- Durova has access to the first of the high resolution scans she requested for restoration (contact her if you are interested in helping with restorations)

- Tropenmuseum decided not to post their exhibition material on a separate wiki

- they decided to integrate their info in Wikipedia portals on the Maroon

- we want to have portals on the English and the Dutch Wikipedia

- Tropenmuseum is planning with the Vereniging Wikimedia Nederland a Maroon portal workshop in Amsterdam (probably in a week's time)

GerardM

Tuesday, September 15, 2009

LiquidThreads is inching its way to the finish

LiquidThreads has been in development for a long time, and it seems to be getting ready for the "big time". That is great news.

LiquidThreads replaces the existing talk pages. It will make these conversations manageable. Being able to summarise or move a thread.. to quote from what has been said before.. all this makes it much easier to respond and still be understood. I like the notion that it will be possible to move a thread to the talk page of another article.

As the previous incarnation of LiquidThreads is used by sites like WikiEducator, I suggested to people to look there for a talk page on steroids. I am really happy with all the improved functionality and usability.

When you want to experience what it is all about, LiquidThreads is now installed on one of the prototype wikis.

Thanks,

GerardM

The library of Philadelphia

We still talk about the time when the library of Alexandria was destroyed. It is still considered one of the worst events in the history of civilised culture; works of great repute by leading authors were lost for ever.

Today I read that the library of Philadelphia will be closed. The buildings, the collections will all still be there. What will be broken is the trust that libraries are there; what will be broken is the practice to take your child to the library to get a book. What may be broken is the constant care of the collections that are uniquely stored part of the Philadelphia museums.

Public access to these collections is gone. People are asked to bring in the books they have borrowed before October 2. When material of these libraries is no longer available to the public, it loses its social relevance while its cultural relevance has lost nothing of its luster.

When libraries need funding, they will seek money wherever they can find it. A good example of this is the recent action of the University of California, Santa Barbara. All their material is now only available at cost and there is a clear distinction between commercial and non commercial offerings.

Much of their material is in the public domain, but they do not have to make public domain material available at no cost. Their argument that this is to make their digitisation effort sustainable has merit.

It is not a pretty sight to see American culture degenerate.

Thanks,

GerardM

Sunday, September 13, 2009

Best practices, a tiff

Sometimes two best practices can be at odds with each other. It is a best practice to limit the number of posts to mailing lists like Commons-l. On the other hand, what to do when a best practice is not understood on such a list?

The thread easily goes into a tailspin, and this helps neither the usefulness of the list nor the promotion of the best practice that is under consideration. At the same time, what is the alternative when the best practice is not yet embedded?

The great news of the last week is that we will support the TIFF format. TIFF is a format used for storing images, including photographs. The one reason why I am so happy with the promised support of the TIFF format is that it is how GLAMs store their digitised collections. They are hesitant about giving us access to their material, and one convincing argument for them is that we are happy to get their material as it is, complete with all the annotations.

Receiving the material in this way is a best practice because it provides provenance, which can be compared with how we use citations in Wikipedia. The license that we insist on allows us to make changes to this material, but this does not remove the moral obligation to treat this material with respect. This means that we should keep original material separate from the changes we make.

GLAMs typically are sceptical when they hear about our restorations. This changes into appreciation when they learn that we keep a restoration separate from the original and when they see the quality of our restorations. Important for the appreciation of the restorations is that they provide better illustrations than the original material and .. the restorations are just gorgeous.

One final reason why it is good to store the original material is that with this best practice, we provide a believable backup for the material we store as well as the GLAM. The library of Alexandria is not the only resource whose loss is still felt. The Archive of Cologne is a modern example that shows that it does not take war or unrest to lose important resources.

The TIFF support will make the material that we already store visible. That is how we progress from the current situation where we store all the material that we restore in its original format.

Thanks,

GerardM

Friday, September 11, 2009

Thinking about Standards

The Weerribben-Wieden, a domain conserved by Staatsbosbeheer, has been certified as a place where sustainable tourism in a nature reserve is practiced. I am happy for Staatsbosbeheer; this is recognition indeed.

It got me thinking about standards and certifications. This is a reward given because Staatsbosbeheer complies with a European standard that formulates a set of requirements. It has obvious value because it indicates to tourists that they can enjoy nature and come back to find that nature will still be there.

As this certification is valuable to Staatsbosbeheer, it might be asked to pay for the certification, because certification involves verifying the practice against the standard. It could also be a European organisation that pays because it wants to stimulate compliance with the standard.

Defining a standard, complying with a standard and assessing compliance with a standard is expensive, and the claim of compliance raises expectations. It is important that a standard can be trusted, because when a standard is proven to be a sham, it reduces the trust in other standards.

A standard makes sense when it adds value or when it stimulates a best practice. Standards are considered to be "business oriented" and consequently it is an accepted practice that the consumer is to pay. It is not only businesses who make use of standards and as I for instance am not willing to pay for the text or the certification of a standard, I will largely ignore standards because the details are outside of my reach.

When the practices of a standards body prevent the adoption of standards, you have a worst-case scenario. Standards bodies are aware of this, but they have been directed by their governments in this way.

What would be a way out of this dilemma?

Thanks,

GerardM

Thursday, September 10, 2009

The Suriname material of the Tropenmuseum

As promised, the material of the Tropenmuseum about Suriname and particularly the Maroon has been uploaded to Commons. When I look at the material, I find that it is spectacular for many reasons.

- it is not only photos, watercolours and prints, there are also large numbers of pictures of all kinds of objects

- the annotations are often quite big and are very informative

The picture of granman Jankoeso, for instance, shows the elected head of a people. And the Maroon are not one big group but many different peoples with different languages and cultures. The most relevant things the Maroon have in common are that they are black and are the descendants of people who escaped slavery.

Many pictures of objects are included. What is quite extraordinary is that the Tropenmuseum wants to include only quality pictures. Pictures with labels or scans of old slides have been excluded; they may be uploaded when a new picture is available.

An example of a scarf made of cotton, one of many textiles in the collection ..

I hope you will enjoy this collection as much as I do.

Thanks,

GerardM

Wednesday, September 09, 2009

Uploading of material from the Tropenmuseum is underway

I am really happy to report that the first 2162 pictures of material of the Tropenmuseum are being uploaded at this moment to Commons.

These pictures, while important in their own right, are in preparation for more material that is to follow.

The pictures can be found here.. These pictures are about the Maroon of Suriname.

Thanks,

GerardM

The White House supports OpenID

Techcrunch informed me with this news: "US Government To Embrace OpenID, Courtesy Of Google, Yahoo, PayPal Et Al."

I think it is brilliant news for OpenID and I expect it will help make the Internet a different place because your contributions are more likely to be attributed to you. I think this demonstrates that the US government understands what it is doing.

If there is one fly in the ointment, it is that the OpenID providers are limited to big corporations, and I am not sure if I want to trust my data to Google, Yahoo, PayPal, AOL, VeriSign, Citi, Equifax, Acxiom, Privo or Wave Systems. I already have an OpenID and I would expect it to work as well.

At that, I would love the Wikimedia Foundation to adopt OpenID. We know it works and I would feel more comfortable when my identity on the Internet is not associated with commercial interests.

Thanks,

GerardM

Tuesday, September 08, 2009

Categorising the most read articles

I loved Jimmy's presentation at Wikimania. The slide I liked best is this one:

It shows a categorisation of the 100 most popular pages of some of the bigger Wikipedias. The premise is that some of the Wikipedias have moved away from the traditional encyclopaedic content.

At Wikimania I asked Bellayet of the Bengali Wikipedia to provide me with the numbers for his project. These numbers indicate that encyclopaedic information is really popular.

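| Category | Pages in the top 100 |
| --- | --- |
| Geography | 23 |
| Science and Tech. | 19 |
| Other | 16 |
| History and historical figures | 14 |
| Culture and the arts | 8 |
| Politics and Society | 6 |
| Sex | 6 |
| Popular Culture | 4 |
| Mathematics | 3 |
| Health | 1 |
| Current Events | 0 |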

It would be interesting to learn what articles people are looking for that they do not find. I would not be surprised when the most missed articles show what people are looking for. When these articles have been written, it will be interesting to learn how popular these articles turn out to be. Not only from internal searches but also when found through search engines.

Thanks,

GerardM

Reality check; looking at the numbers

The 10 biggest Wikipedias generate 92.65% of the Wikipedia traffic. These 10 languages are written in three scripts: Latin, Cyrillic and the Japanese writing system. When you look at the next 10 languages, there are three additional scripts: Chinese, Thai and Arabic.

When you look at the top 20 languages by views, you find that some are growing really fast: Russian at 117%, Arabic at 44% and Indonesian at 32%. This can be compared to the growth for English of 13%. Some are not doing well: German and Dutch at 4%, Finnish at 2%.

The English Wikipedia ranks 155th in traffic growth with 13%, but it gets most of the traffic anyway (53.54%). All the other projects together add only 2% more, bringing the total growth in traffic to 15%.
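One way to read these three figures together is as a weighted average. The sketch below is only an illustration: it assumes that the 53.54% share applies at the start of the period (the statistics quoted here do not say which moment it refers to), and the function name is made up for this example. Under that assumption, the growth of everything that is not the English Wikipedia follows from the total growth and the English contribution.

```python
def implied_other_growth(total_growth, big_share, big_growth):
    """Growth rate of all remaining projects combined.

    Assumes total_growth is the share-weighted average of the two
    groups, with big_share measured at the start of the period
    (an assumption made for this sketch).
    """
    contribution = big_share * big_growth
    return (total_growth - contribution) / (1.0 - big_share)


# Figures quoted in the post, written as fractions.
english_share = 0.5354
english_growth = 0.13
total_growth = 0.15

# Percentage points of the total growth that come from English alone.
english_points = english_share * english_growth
other_growth = implied_other_growth(total_growth, english_share, english_growth)

print(f"English contributes {english_points:.1%} of traffic as growth")
print(f"All other projects together grew by roughly {other_growth:.1%}")
```

Read this way, the other projects together would be growing somewhat faster than English, which fits the fast growers mentioned above. The result shifts slightly if the share is taken at the end of the period instead.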

When I look at what I remember of the group statistics at translatewiki.net, I get the impression that the languages that improved their localisation have increased their traffic as well. I am also quite pleased with the growth of the newly created Wikipedias. Pontic is the sad exception.

So what does this all mean.. The top 30 languages, with seven scripts, represent 98.32% of our traffic. When you look at the rest, you find that many languages are growing quite nicely and are known to have really active communities. These beautifully compiled numbers by Erik Zachte are nice because they prevent the easy comparisons based on article counts.

For me it does not change much, because I do not know yet how to interpret these numbers. There is still so much I do not know, like where the traffic comes from and what percentage of people read a Wikipedia in their second language. I am still looking for a better understanding of the relation between traffic numbers and our localisation numbers, and finally I am looking at what makes a project take off, what the inflection points are and what factors affect it.

Thanks,

GerardM

Request for help and there is more to it even when your language is out of Africa...

ANLoc, the African Network for Localization, is seeking help to add as much information as possible to the locale information about African languages. They want as many people as possible to come to their project and provide information like translations of language names, the sorting order, and how you write a number, a date or the time.

There is some urgency because October first is the deadline for new languages and new information to be included in the CLDR, the Common Locale Data Repository. Open Office for instance relies on this data in order to support a language.

There is more!! We use this information in the MediaWiki software and we are really reluctant to compile this information in translatewiki.net as well. So our request is: go to Unicode's CLDR website and see if the data about your language or in your language can be perfected.

Thanks,

GerardM

Babel templates on the Karakalpak Wikipedia

This is the Babel information of Atabek. He is active on both translatewiki.net and the Karakalpak Wikipedia.

The Karakalpak Wikipedia is a young one, and Atabek is apparently of the opinion that providing information about his language skills is important. He imported the templates that are necessary to provide information about himself. He did not create several categories and other stuff, and consequently his Babel info looks rather red.

Had the Babel extension been available on the Karakalpak Wikipedia, I could add my Babel information as well. As it is, I do not want to burden a project that should concentrate on growing its number of articles.

Providing the functionality of the Babel extension seems obvious. It is used at translatewiki.net and other Wikis.. What does it take for the Wikimedia Foundation to accept it?

Thanks,

GerardM

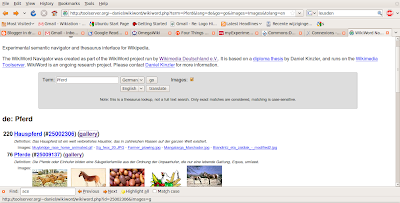

Wikiword navigator

Duesentrieb showed the latest version of his Wikiword software. I played with it and it did not really work for me. There was however one feature I loved: showing pictures with the concepts used. This is so exciting because it may help us find the holy grail for Commons: finding pictures using "other" languages.

There was also the "old" version of the software that did not have the pictures in there.. He just added it and made my day..

Thanks,

GerardM

Monday, September 07, 2009

Becoming a "best practice"

At translatewiki.net we support an increasing number of applications. It all started with MediaWiki, and more and more applications followed. We are currently working on supporting OpenStreetMap; this is a tough nut to crack as there are three issues that still need to be resolved.

Supporting OpenStreetMap makes sense on many levels. The more obvious one is that, as OSM will be used in Wikimedia Foundation projects, our community will find it a compelling application to support. What is more surprising is that as translatewiki.net supports more applications, it becomes more of a "best practice" for supporting languages.

According to the Network to Promote Linguistic Diversity, some 50 million Europeans, or 10% of Europe's population, speak a regional or minority language. The NPLD supports the "best practices" that help these languages. We have been corresponding about this with one of our localisers who is professionally involved in supporting his language. He indicated that our strengths are:

- We are dedicated to internationalisation and localisation

- We have a community of localisers

- Our community does support many Open Source applications

The talks about the NPLD and its possible involvement are still very much in the early stages. For me the "take home message" is first and foremost that our work is being appreciated and considered to be a best practice.

Thanks,

GerardM

Friday, September 04, 2009

Supporting MediaWiki extensions

Translatewiki.net supports MediaWiki and its extensions. There are many extensions actively used and some extensions may not be used at all. We do not really know. What we do know is that some have not been updated for a long time.

The PovWatch extension, for instance, has not been updated since June 2006, and it is not clear if this functionality works at all and if so for what releases of MediaWiki. It may be that this extension has never been used. It is not clear that extensions like this have been tested for their functionality.. All this makes them problematic for Wiki administrators and users alike.

There may be more extensions that are not used but are localised at translatewiki.net. Removing such extensions prevents our localisers from wasting their time.

You can help us by identifying the extensions that are no longer relevant.

Thanks,

GerardM

Thursday, September 03, 2009

Facebook is trying to be evil

Facebook wants to patent the idea of crowdsourced translations for social networks. They applied for a patent in December 2008. If they were to look for existing technology, they would find that translatewiki.net has been doing exactly that for a couple of years prior to this date.

I heard the news from several sources; I liked the way LISA brought the news in their "Globalisation Insider" best. It is well worth a read.

Thanks,

GerardM

Tuesday, September 01, 2009

Translatewiki.net update

With a new month, the "group statistics in time" of translatewiki.net are as interesting as ever.. This time the effects of the localisation rally are evident. All the categories have improved considerably.

The one thing that is odd is the decrease in the number of languages. The Picard language was added and Iriga Bicolano, Palembang, Tamazight and Eastern Yiddish were removed. There was no activity for these languages at all.

Communicating about a project like translatewiki.net is vitally important. We need to tell what is happening, and we have been doing that with a newsletter and two blogs. That does not mean there is nothing more to be said: Siebrand has started a translatewiki Twitter account.

Thanks,

GerardM
